
Event Extraction approaches

To give a general view of the systems developed for the Event Extraction task, prior work can be divided into: pattern-based systems [Krupka et al., 1991, Hobbs et al., 1992, Riloff, 1996a, Riloff, 1996b, Yangarber et al., 2000], machine learning systems based on engineered features (i.e. feature-based) [Freitag, 1998, Chieu et al., 2003, Surdeanu et al., 2006, Ji et al., 2008, Patwardhan and Riloff, 2009, Liao and Grishman, 2010, Huang and Riloff, 2011, Hong et al., 2011, Li et al., 2013b, Bronstein et al., 2015] and neural-based approaches [Chen et al., 2015b, Nguyen and Grishman, 2015a, Nguyen et al., 2016a, Feng et al., 2016].

Pattern-based approaches

Given the high cost of expert manual annotation, several pattern-based (rule-based) systems have been proposed to speed up the annotation process.

The pattern-based approaches first acquire a set of patterns, where the patterns consist of a predicate, an event trigger, and constraints on its local syntactic context.

They also include a rich set of ad-hoc lexical features (e.g. compound words, lemma, synonyms, Part-of-Speech (POS) tags), syntactic features (e.g. grammar-level features, dependency paths) and semantic features (e.g. features from a multitude of sources, WordNet, gazetteers) to identify role fillers. Earlier pattern-based extraction systems were developed for the MUC conferences [Krupka et al., 1991, Hobbs et al., 1992, Riloff, 1996a, Yangarber et al., 2000].
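To make the notion of a pattern concrete, the following is a minimal sketch of matching trigger-plus-syntactic-constraint patterns against a parsed sentence. The `Pattern` class, the `extract_role_fillers` function, the token dictionary layout, the dependency labels and the role names are all hypothetical choices made for illustration; they do not reproduce any of the cited systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pattern:
    """Hypothetical extraction pattern: trigger lemma + local syntactic constraint."""
    trigger: str       # lemma of the event trigger, e.g. "attack"
    dep_relation: str  # dependency relation required between filler and trigger
    role: str          # role assigned to a filler that satisfies the constraint


def extract_role_fillers(tokens, patterns):
    """Return (role, filler, trigger) triples for every token matching a pattern.

    `tokens` is a list of dicts with keys 'text', 'lemma', 'head' (index of the
    syntactic head, or None for the root) and 'dep' (relation to the head).
    """
    results = []
    for tok in tokens:
        head = tok["head"]
        if head is None:
            continue  # the root has no head, so it cannot fill a role here
        head_tok = tokens[head]
        for p in patterns:
            if p.trigger == head_tok["lemma"] and p.dep_relation == tok["dep"]:
                results.append((p.role, tok["text"], head_tok["text"]))
    return results


# Hand-written toy parse of "Rebels attacked the village" (assumed, not parser output).
tokens = [
    {"text": "Rebels", "lemma": "rebel", "head": 1, "dep": "nsubj"},
    {"text": "attacked", "lemma": "attack", "head": None, "dep": "root"},
    {"text": "the", "lemma": "the", "head": 3, "dep": "det"},
    {"text": "village", "lemma": "village", "head": 1, "dep": "obj"},
]
patterns = [
    Pattern("attack", "nsubj", "Attacker"),
    Pattern("attack", "obj", "Target"),
]
fillers = extract_role_fillers(tokens, patterns)
print(fillers)
```

In a real system the parse would come from a syntactic parser and the constraints would typically be richer (lexical and semantic features in addition to a single dependency relation).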

For instance, the AutoSlog system [Riloff, 1996b] automatically created extraction patterns that could be used to construct dictionaries of important elements for a particular domain, using text in which the elements of interest were manually tagged for the training stage only. Later, [Riloff, 1996a] observed that patterns occurring with substantially higher frequency in relevant documents than in irrelevant documents are likely to be good extraction patterns. They propose the separation between relevant and irrelevant syntactic patterns and a re-ranking of the patterns. The resulting system, named AutoSlog-TS, attempted to remove the need for hand-labeled input by requiring only pre-classified texts and a set of generic syntactic patterns. The main drawback of this system is that the patterns must be inspected manually, which can be costly. Many proposed approaches aimed to minimize human supervision with a bootstrapping technique for event extraction. [Huang and Riloff, 2012a] proposed a bootstrapping method to extract event arguments using only a small amount of annotated data. After the manual inspection of the patterns, a further manual step was needed to filter out the remaining irrelevant patterns.
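The observation that good patterns occur much more often in relevant than in irrelevant documents can be illustrated with a small ranking sketch. The scoring used here (relevance rate multiplied by the base-2 logarithm of the total frequency) is an assumption chosen for illustration, in the spirit of frequency-based re-ranking; the function name `rank_patterns`, the `min_freq` threshold and the toy pattern strings are hypothetical.

```python
import math
from collections import Counter


def rank_patterns(relevant_docs, irrelevant_docs, min_freq=2):
    """Rank patterns by how strongly they are associated with relevant documents.

    Each document is a list of pattern strings that fired in it.
    Returns (pattern, relevance_rate, score) tuples, best first.
    """
    rel = Counter(p for doc in relevant_docs for p in doc)
    irr = Counter(p for doc in irrelevant_docs for p in doc)
    scored = []
    for pattern, rel_freq in rel.items():
        total = rel_freq + irr[pattern]  # Counter returns 0 for unseen patterns
        if total < min_freq:
            continue  # too rare to be scored reliably (assumed threshold)
        relevance_rate = rel_freq / total
        # Assumed score: favours patterns that are both relevant and frequent.
        score = relevance_rate * math.log2(total)
        scored.append((pattern, relevance_rate, score))
    scored.sort(key=lambda item: item[2], reverse=True)
    return scored


# Toy pre-classified corpus: only document-level relevance labels are needed.
relevant_docs = [
    ["SUBJ was bombed", "SUBJ said"],
    ["SUBJ was bombed", "exploded in NP"],
    ["SUBJ was bombed", "SUBJ said"],
    ["SUBJ was bombed"],
]
irrelevant_docs = [
    ["SUBJ said", "SUBJ was sold"],
    ["SUBJ said"],
    ["SUBJ said", "SUBJ was sold"],
]
ranked = rank_patterns(relevant_docs, irrelevant_docs)
for pattern, rate, score in ranked:
    print(f"{pattern!r}: relevance rate {rate:.2f}, score {score:.2f}")
```

Note that the ranking only orders candidate patterns; as described above, a human still has to inspect the top of the list to decide which patterns to keep.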

Event extraction evaluation

As mentioned at the beginning of this chapter, the event extraction task has been developed through several evaluations, mainly MUC, ACE, and TAC. Each evaluation defined specific metrics and rules, but the work coming after ACE generally adopted those used in [Ji et al., 2008], which are broadly close to those of TAC.

More precisely, the evaluation in this context is based on event mentions and event arguments. An event mention is a phrase or a sentence within which an event is described, including its trigger and arguments. The trigger is the word (or words) that most clearly expresses the event, and an event can have an arbitrary number of arguments.
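As an illustration of how such an evaluation can be scored, the sketch below computes precision, recall and F1 over predicted triggers. The matching criterion used here (a predicted trigger counts as correct only if its document, offsets and event type all match a gold trigger) is an assumption made for this example, as are the function name, the tuple layout and the toy annotations.

```python
def precision_recall_f1(gold, predicted):
    """Compute precision, recall and F1 between two collections of items.

    Items are compared by exact equality, so each item should encode
    everything that must match (here: document id, offsets, event type).
    """
    gold, predicted = set(gold), set(predicted)
    correct = len(gold & predicted)
    precision = correct / len(predicted) if predicted else 0.0
    recall = correct / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Toy annotations: (document id, start offset, end offset, event type).
gold_triggers = {
    ("doc1", 12, 20, "Attack"),
    ("doc1", 45, 49, "Die"),
    ("doc2", 3, 9, "Transport"),
}
predicted_triggers = {
    ("doc1", 12, 20, "Attack"),     # correct
    ("doc1", 45, 49, "Injure"),     # right span, wrong type
    ("doc2", 3, 9, "Transport"),    # correct
    ("doc2", 30, 35, "Attack"),     # spurious
}
p, r, f1 = precision_recall_f1(gold_triggers, predicted_triggers)
print(f"P={p:.3f} R={r:.3f} F1={f1:.3f}")
```

The same function can be reused for arguments by adding the argument offsets and role to each tuple, under the same exact-match assumption.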

In-depth analysis of the state-of-the-art approaches

So far, we have discussed the previous systems developed for the Event Extraction (EE) task in relation to the main challenges: the high cost of manual data annotation and the complexity of feature engineering.

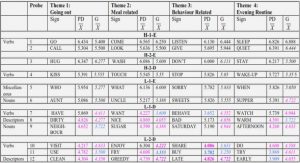

In order to position our work, we continue with an in-depth analysis of the systems evaluated on the ACE 2005 dataset in Table 2.2. These systems follow one of two strategies:

- pipeline, where the subtask of argument role prediction follows the event detection one: [Liao and Grishman, 2010, Hong et al., 2011] (2, 3), MaxEnt with local features in [Li et al., 2013b] and the dynamic multi-pooling CNN in [Chen et al., 2015b] (1, 5).

- joint inference, where the prediction of triggers and arguments is performed at the same time: joint beam search with local and global features in [Li et al., 2013b] and joint Recurrent Neural Networks (RNNs) in [Nguyen et al., 2016a] (4, 6).

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are MLPs with a special structure. They are a type of feed-forward neural network whose layers are formed by a convolution operation followed by a pooling operation [LeCun et al., 1998a, Kalchbrenner et al., 2014]. This means that the first layers do not use all input features at the same time, but only groups of locally connected features. This type of neural network is a well-known deep learning architecture inspired by the natural visual perception mechanism of living creatures. CNNs became the mainstream method for solving various computer vision problems, such as image classification [Russakovsky et al., 2015], object detection [Russakovsky et al., 2015, Everingham et al., 2010], semantic segmentation [Dai et al., 2016], image retrieval [Tolias et al., 2015], tracking [Nam and Han, 2016], text detection [Jaderberg et al., 2014] and many others.

[LeCun et al., 1990] published the seminal paper establishing the modern framework of CNNs, and later improved it in [LeCun et al., 1998a]. They developed a multi-layer neural network called LeNet-5, which could classify handwritten digits. This highly influential paper promoted deep convolutional neural networks for image processing. Two factors made this possible: first, the availability of large enough datasets and, second, the development of GPUs powerful enough to train large networks efficiently.
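The "convolution followed by pooling" structure can be illustrated on a one-dimensional input with a few lines of plain Python. This is a minimal sketch with a hand-picked kernel and no learning; the function names, the kernel values and the pooling size are assumptions chosen for the example. As in most deep learning libraries, the "convolution" is implemented as a sliding dot product without flipping the kernel.

```python
def conv1d(sequence, kernel):
    """Slide `kernel` over `sequence` and return the dot product at each position.

    Each output value depends only on len(kernel) neighbouring inputs,
    which is the local connectivity that distinguishes a CNN from an MLP.
    """
    k = len(kernel)
    return [
        sum(kernel[j] * sequence[i + j] for j in range(k))
        for i in range(len(sequence) - k + 1)
    ]


def max_pool1d(features, size):
    """Keep the maximum of each non-overlapping window of length `size`."""
    return [
        max(features[i:i + size])
        for i in range(0, len(features) - size + 1, size)
    ]


signal = [1, 3, 2, 5, 4, 0, 1, 2]
kernel = [1, 0, -1]  # hand-picked filter; in a CNN these weights are learned

feature_map = conv1d(signal, kernel)
pooled = max_pool1d(feature_map, size=2)

print(feature_map)  # [-1, -2, -2, 5, 3, -2]
print(pooled)       # [-1, 5, 3]
```

The same kernel weights are reused at every position, so the layer has far fewer parameters than a fully connected layer over the same input, and pooling makes the result less sensitive to the exact position of a feature.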

Table of contents:

Résumé

Acknowledgements

1 Introduction

1.1 Contributions of this thesis

1.2 Structure and outline of the thesis

2 State-of-the-art

2.1 Event Extraction

2.1.1 Event Extraction approaches

2.1.2 Pattern-based approaches

2.1.3 Feature-based approaches

2.1.4 Neural-based approaches

2.2 Event extraction evaluation

2.3 In-depth analysis of the state-of-the-art approaches

2.4 Conclusions

3 Event Detection

3.1 Background theory

3.1.1 Multi-Layer Perceptrons (MLPs)

3.1.2 Training neural networks

3.1.3 Optimization problem

3.1.4 Regularization

3.1.5 Gradient-based learning

3.1.6 Convolutional Neural Networks (CNNs)

3.2 Word embeddings

3.2.1 Neural language model

3.2.2 Collobert & Weston (C&W)

3.2.3 Word2vec

3.2.4 FastText

3.2.5 Dependency-based

3.2.6 GloVe

3.2.7 Conclusions

3.3 Event Detection

3.3.1 What is an event trigger?

3.3.2 Corpus analysis

3.3.3 Event Detection CNN

3.3.4 Evaluation framework

3.3.5 Results and comparison with existing systems

3.3.6 Word embeddings analysis

3.3.7 Retrofitting

3.4 Conclusions

4 Deep neural network architectures for Event Detection

4.1 Background theory

4.1.1 Recurrent Neural Networks (RNNs)

4.1.2 Long Short-Term Memory Units (LSTMs)

4.1.3 Bidirectional RNNs

4.1.4 Training RNNs

4.2 Exploiting sentential context in Event Detection

4.2.1 Sentence embeddings

4.2.2 Sentence encoder

4.2.3 Event Detection with sentence embeddings

4.2.4 Results

4.3 Exploiting the internal structure of words for Event Detection

4.3.1 Character-level CNN

4.3.2 Model

4.3.3 Data augmentation

4.3.4 Results

4.4 Conclusions

5 Argument role prediction

5.1 What are event arguments?

5.2 Links with Relation Extraction

5.3 CNN model for argument role prediction

5.4 The influence of Event Detection on arguments

5.5 Results

5.6 Conclusions

6 Conclusions

6.1 Summary of the contributions of the thesis

6.2 Future work

Bibliography