
Neural Network Language Models

Connectionist language modeling is another approach worth mentioning, although it has received little attention in handwriting applications so far. These models, which are based on neural networks, are more often found in the machine translation and speech recognition literature.

The basic idea of these methods is to project each word of the history into a continuous space and to perform a classification with neural networks to predict the next word. For example, Schwenk & Gauvain (2002) and Bengio et al. (2003) use a multi-layer perceptron on the projection of the last n words. Mikolov et al. (2010) predict the next word with a recurrent neural network, which removes the need to define a fixed-length history, since the history is encoded in the memory of the recurrent layer.
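As an illustration of the feed-forward variant, the following is a minimal sketch of the forward pass only: each word of the history is projected into a continuous space, the projections are concatenated, and a softmax layer produces a distribution over the next word. The class name, layer sizes, tanh non-linearity and random untrained weights are assumptions made for the example, not the configuration of the cited works.

```python
import math
import random


def softmax(scores):
    """Turn arbitrary scores into a probability distribution."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class FeedForwardLM:
    """Hypothetical, untrained feed-forward language model (illustration only)."""

    def __init__(self, vocab_size, embed_dim, context_size, hidden_dim, seed=0):
        rng = random.Random(seed)

        def rand_matrix(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.context_size = context_size
        # One continuous vector per vocabulary word (the projection).
        self.embeddings = rand_matrix(vocab_size, embed_dim)
        self.hidden_weights = rand_matrix(hidden_dim, context_size * embed_dim)
        self.output_weights = rand_matrix(vocab_size, hidden_dim)

    def next_word_distribution(self, history):
        """Return P(next word | last `context_size` word ids of the history)."""
        context = history[-self.context_size:]
        if len(context) != self.context_size:
            raise ValueError("history is shorter than the context size")
        # Project each word and concatenate the projections.
        projected = [x for word_id in context for x in self.embeddings[word_id]]
        hidden = [math.tanh(h) for h in matvec(self.hidden_weights, projected)]
        return softmax(matvec(self.output_weights, hidden))


model = FeedForwardLM(vocab_size=10, embed_dim=4, context_size=3, hidden_dim=8)
probabilities = model.next_word_distribution([1, 2, 3, 4])
```

Only the last three word identifiers of the history are used here, which is the fixed history that a recurrent network avoids. The output layer has one score per vocabulary word, so its cost grows with the vocabulary size.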

Since the output space is very large in large vocabulary applications, special output structures have been proposed to train and use the network efficiently, e.g. in (Morin & Bengio, 2005; Mikolov et al., 2011; Le et al., 2011). In (Graves et al., 2013a), the acoustic and language models are integrated in a single recurrent neural network.

Open-Vocabulary Approaches

We have introduced n-gram models (or language models in general) for word sequences, but they can just as well model sequences of characters. Working with characters rather than words has several advantages. First, as already mentioned for recognition models, there are fewer distinct tokens, and much more data for character modeling. Therefore, we can estimate more reliable probability distributions, and build higher-order n-grams. Moreover, although the output words will not necessarily be valid ones, there is no such thing as an OOV.
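A character n-gram model can be sketched in a few lines. The example below counts character n-grams and returns smoothed conditional probabilities. The function names, the "^" padding symbol and the add-one smoothing are assumptions chosen for brevity; they are not the estimation method used in this work.

```python
from collections import Counter, defaultdict


def train_char_ngram(text, order):
    """Count, for each history of order-1 characters, the characters that follow it."""
    counts = defaultdict(Counter)
    padded = "^" * (order - 1) + text  # "^" is a hypothetical start-of-text symbol
    for i in range(order - 1, len(padded)):
        history = padded[i - order + 1:i]
        counts[history][padded[i]] += 1
    return counts


def char_probability(counts, history, char, alphabet):
    """Add-one smoothed estimate of P(char | history)."""
    history_counts = counts.get(history, Counter())
    total = sum(history_counts.values())
    return (history_counts[char] + 1) / (total + len(alphabet))


bigram_counts = train_char_ngram("abab", order=2)
p_b_given_a = char_probability(bigram_counts, "a", "b", alphabet={"a", "b"})
```

Because the alphabet is small, every character receives a non-zero probability after any history, so any character string can be scored, including words that are absent from a word vocabulary.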

Several works considered mixing word LMs limited to a vocabulary, and character LMs to recognize potential OOVs. For example, in his PhD thesis, Bazzi (2002) proposed a model where the lexicon (list of words) and filler model (looping on phones) were examined. The word language model contains an OOV token guiding the search towards the phone language model. In FST search graphs (or FST representations of language models), replacing all OOV arcs by the character LM might consume too much memory. For handwriting recognition, Kozielski et al. (2013b) use a dynamic decoder, where the integration of the LM is done on the fly, which enables them to explore the character LM only when the OOV arc is explored. Messina & Kermorvant (2014) propose an over-generative method which limits the number of places where the character LM should be inserted, allowing this hybrid LM to be plugged in static decoding graphs.

Measuring the Quality of the Recognition

In order to assess the quality of a recognition system, a measure of the performance is required. For isolated character or word recognition, the mere accuracy (proportion of correctly recognized items) is sufficient. In the applications considered in this thesis, the transcript is a sequence of words. Counting the number of completely correct sequences is too coarse, because there would be no difference between a sentence with no correct word and another with only one misrecognition.

In a sequence, we find not only incorrect words, but possibly also inserted or deleted words. Measures such as precision and recall may take these types of errors into account, but not the sequential aspect. The most popular measure of error, used in international evaluations of handwriting or speech recognition, is based on the Levenshtein edit distance (Levenshtein, 1966).
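To make the measure concrete, here is a minimal sketch of the edit distance computed by dynamic programming, and of a word error rate obtained by dividing that distance by the number of reference words. The function names are illustrative, and the whitespace tokenization is an assumption; evaluation campaigns usually define their own text normalization.

```python
def edit_distance(reference, hypothesis):
    """Minimum number of substitutions, insertions and deletions
    needed to turn `reference` into `hypothesis` (any two sequences)."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_item in enumerate(reference, start=1):
        current = [i]
        for j, hyp_item in enumerate(hypothesis, start=1):
            substitution = previous[j - 1] + (ref_item != hyp_item)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1]


def word_error_rate(reference, hypothesis):
    """Edit distance between word sequences, normalized by the reference length."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("the reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
```

In this example one word is substituted and one is deleted, so the rate is 2 errors for 6 reference words. The same `edit_distance` function applied to character sequences gives a character-level error rate.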

Hidden Markov Models for Handwriting Recognition

The problem of handwritten text line recognition is now widely approached with hidden Markov models (Plötz & Fink, 2009); the first applications of HMMs to that problem date from Levin & Pieraccini (1992) and Kaltenmeier et al. (1993). These models are designed to handle sequential data, with hidden states emitting observations. There are well-known techniques to (i) compute the probability of an observation sequence given a model, (ii) find the sequence of states which is most likely to have produced an observed sequence, and (iii) find the parameters of the model to maximize the probability of observing a sequence (Rabiner & Juang, 1986). The third problem is the actual training of these models, while the second makes it possible to decode a sequence of observations. In this paradigm, the characters are each represented by a hidden Markov model. A simple concatenation of these produces word models. The advantages are twofold:

• from a few character models (around one hundred), we can build word models for potentially any word of the language. Thus this method is much more scalable to large vocabulary problems than systems attempting to model each word separately.

• recognizing a word from character models does not require a prior segmentation of the word image into characters. Since the word model is a hidden Markov model of its own, the segmentation into characters is a by-product of the decoding procedure, which consists in finding the most likely sequence of states.
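The second point can be illustrated with a small Viterbi sketch. It assumes one state per character (real character models typically have several), a left-to-right topology with a self-loop, a single shared transition probability `stay_prob`, and a user-supplied `emission_logprob` function. All of these names and simplifications are assumptions for the example.

```python
import math


def viterbi_align(word, observations, emission_logprob, stay_prob=0.5):
    """Most likely state sequence of the word model built by concatenating
    one-state character models; returns (character label per frame, log-probability)."""
    states = list(word)
    n_states, n_frames = len(states), len(observations)
    if n_states == 0 or n_frames < n_states:
        raise ValueError("need at least one frame per character state")
    log_stay, log_move = math.log(stay_prob), math.log(1.0 - stay_prob)
    neg_inf = float("-inf")
    score = [[neg_inf] * n_states for _ in range(n_frames)]
    came_from = [[0] * n_states for _ in range(n_frames)]
    # The path must start in the first character of the word.
    score[0][0] = emission_logprob(states[0], observations[0])
    for t in range(1, n_frames):
        for s in range(n_states):
            stay = score[t - 1][s] + log_stay
            move = score[t - 1][s - 1] + log_move if s > 0 else neg_inf
            best_prev, best = (s, stay) if stay >= move else (s - 1, move)
            if best == neg_inf:
                continue  # state s cannot be reached at frame t
            score[t][s] = best + emission_logprob(states[s], observations[t])
            came_from[t][s] = best_prev
    # The path must end in the last character; backtrack from there.
    path = [n_states - 1]
    for t in range(n_frames - 1, 0, -1):
        path.append(came_from[t][path[-1]])
    path.reverse()
    return [states[s] for s in path], score[-1][-1]


def toy_emission(char, observation):
    """Hypothetical emission model: frames are noisy character labels."""
    return math.log(0.9 if observation == char else 0.1)


labels, log_probability = viterbi_align("ab", list("aaabb"), toy_emission)
```

The returned labels assign each frame to a character of the word, so the boundary between "a" and "b" is obtained from the decoding itself, without a prior segmentation.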

Neural Networks for Handwriting Recognition

Neural Networks (NNs) are popular systems for pattern recognition in general. They are made from basic processing units, linked to each other with weighted and directed connections, such that the outputs of some units are inputs to others. The appellation “(Artificial) Neural Network” comes from the similarity between the units of these models and biological neurons.
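As a minimal sketch of such a unit, the function below computes a weighted sum of its inputs followed by a non-linearity, and the outputs of two units are then fed to a third. The logistic activation and the particular weights are assumptions made for illustration.

```python
import math


def unit_output(inputs, weights, bias=0.0):
    """Weighted sum of the inputs, squashed by a logistic function."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-activation))


# Two units read the same inputs; a third unit reads their outputs.
hidden = [unit_output([1.0, 0.0], [0.5, -0.5]), unit_output([1.0, 0.0], [-1.0, 1.0])]
output = unit_output(hidden, [1.0, 1.0])
```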

The first formal description of artificial neural networks was proposed by McCulloch & Pitts (1943). Algorithms to adjust the weights of the connections led to the perceptron (Rosenblatt, 1958) and to Multi-Layer Perceptrons (MLPs; Rumelhart et al., 1988). Among the different kinds of neural networks, Recurrent Neural Networks (RNNs), able to process sequences, and Convolutional Neural Networks (ConvNNs; LeCun et al., 1989), suited to image inputs, are worth noting.

Table of contents:

List of Tables

List of Figures

Introduction

I HANDWRITING RECOGNITION — OVERVIEW

1 Offline Handwriting Recognition – Overview of the Problem

1.1 Introduction

1.2 Preliminary Steps to Offline Handwriting Recognition

1.3 Reducing Handwriting Variability with Image Processing Techniques

1.3.1 Normalizing Contrast

1.3.2 Normalizing Skew

1.3.3 Normalizing Slant

1.3.4 Normalizing Size

1.4 Extraction of Relevant Features for Handwriting Recognition

1.4.1 Text Segmentation for Feature Extraction

1.4.2 Features for Handwriting Representation

1.5 Modeling Handwriting

1.5.1 Whole-Word Models

1.5.2 Part-Based Methods

1.5.3 Segmentation-Free Approach

1.6 Modeling the Language to Constrain and Improve the Recognition

1.6.1 Vocabulary

1.6.2 Language Modeling

1.6.3 Open-Vocabulary Approaches

1.7 Measuring the Quality of the Recognition

1.8 Conclusion

2 Handwriting Recognition with Hidden Markov Models and Neural Networks

2.1 Introduction

2.2 Hidden Markov Models for Handwriting Recognition

2.2.1 Definition

2.2.2 Choice of Topology

2.2.3 Choice of Emission Distribution

2.2.4 Model Refinements

2.2.5 Decoding

2.3 Neural Networks for Handwriting Recognition

2.3.1 The Multi-Layer Perceptron

2.3.2 Recurrent Neural Networks

2.3.3 Long Short-Term Memory Units

2.3.4 Convolutional Neural Networks

2.4 Handwriting Recognition Systems with Neural Networks

2.4.1 The Hybrid NN/HMM scheme

2.4.2 Predicting Characters

2.4.3 NN Feature Extractors

2.5 Training Models

2.5.1 Training Hidden Markov Models with Generative Emission Models

2.5.2 Training Neural Networks

2.5.3 Training Deep Neural Networks

2.5.4 Training Complete Handwriting Recognition Systems

2.6 Conclusion

II EXPERIMENTAL SETUP

3 Databases and Software

3.1 Introduction

3.2 Databases of Handwritten Text

3.2.1 Rimes

3.2.2 IAM

3.2.3 Bentham

3.3 Software

3.4 A Note about the Experimental Setup in the Next Chapters

4 Baseline System

4.1 Introduction

4.2 Preprocessing and Feature Extraction

4.2.1 Image Preprocessing

4.2.2 Feature Extraction with Sliding Windows

4.3 Language Models

4.3.1 Corpus Preparation and Vocabulary Selection

4.3.2 Language Models Estimation

4.3.3 Recognition Output Normalization

4.4 Decoding Method

4.5 A GMM/HMM baseline system

4.5.1 HMM topology selection

4.5.2 GMM/HMM training

4.5.3 Results

4.6 Conclusion

III DEEP NEURAL NETWORKS IN HIDDEN MARKOV MODEL SYSTEMS

5 Hybrid Deep Multi-Layer Perceptrons / HMM for Handwriting Recognition

5.1 Introduction

5.2 Experimental Setup

5.3 Study of the Influence of Input Context

5.3.1 Alignments from GMM/HMM Systems

5.3.2 Handcrafted Features

5.3.3 Pixel Intensities

5.4 Study of the Impact of Depth in MLPs

5.4.1 Deep MLPs

5.4.2 Deep vs Wide MLPs

5.5 Study of the Benefits of Sequence-Discriminative Training

5.6 Study of the Choice of Inputs

5.7 Conclusion

6 Hybrid Deep Recurrent Neural Networks / HMM for Handwriting Recognition

6.1 Introduction

6.2 Experimental Setup

6.2.1 RNN Architecture Overview

6.2.2 Decoding in the Hybrid NN/HMM Framework

6.3 Study of the Influence of Input Context

6.3.1 Including Context with Frame Concatenation

6.3.2 Context through the Recurrent Connections

6.4 Study of the Influence of Recurrence

6.5 Study of the Impact of Depth in BLSTM-RNNs

6.5.1 Deep BLSTM-RNNs

6.5.2 Deep vs Wide BLSTM-RNNs

6.5.3 Analysis

6.6 Study of the Impact of Dropout

6.6.1 Dropout after the Recurrent Layers

6.6.2 Dropout at Different Positions

6.6.3 Study of the Effect of Dropout in Complete Systems (with LM)

6.7 Study of the Choice of Inputs

6.8 Conclusion

IV COMPARISON AND COMBINATION OF DEEP MLPs AND RNNs

7 Experimental Comparison of Framewise and CTC Training

7.1 Introduction

7.2 Experimental Setup

7.3 Relation between CTC and Forward-Backward Training of Hybrid NN/HMMs

7.3.1 Notations

7.3.2 The Equations of Forward-Backward Training of Hybrid NN/HMMs

7.3.3 The Equations of CTC Training of RNNs

7.3.4 Similarities between CTC and hybrid NN/HMM Training

7.4 Topology and Blank

7.5 CTC Training of MLPs

7.6 Framewise vs CTC Training

7.7 Interaction between CTC Training and the Blank Symbol

7.7.1 Peaks

7.7.2 Trying to avoid the Peaks of Predictions

7.7.3 The advantages of prediction peaks

7.8 CTC Training without Blanks

7.9 The Role of the Blank Symbol

7.10 Conclusion

8 Experimental Results, Combinations and Discussion

8.1 Introduction

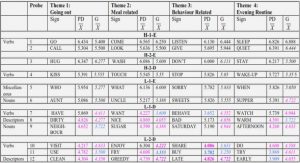

8.2 Summary of Results on Rimes and IAM Databases

8.2.1 MLP/HMM Results

8.2.2 RNN/HMM Results

8.2.3 Comparison of MLP/HMM and RNN/HMM Results

8.2.4 Combination of the Proposed Systems

8.3 The Handwritten Text Recognition tranScriptorium (HTRtS) Challenge

8.3.1 Presentation of the HTRtS Evaluation and of the Experimental Setup

8.3.2 Systems Submitted to the Restricted Track

8.3.3 Systems Submitted to the Unrestricted Track

8.3.4 Post-Evaluation Improvements

8.4 Conclusion

Conclusions and Perspectives

List of Publications

Appendices

A Databases

A.1 IAM

A.2 Rimes (ICDAR 2011 setup)

A.3 Bentham (HTRtS 2014 setup)

B Résumé Long

B.1 Système de Base

B.2 Systèmes Hybrides Perceptrons Multi-Couches Profonds / MMC

B.3 Systèmes Hybrides Réseaux de Neurones Récurrents Profonds / MMC

B.4 Une Comparaison Expérimentale de l’Entraînement CTC et au Niveau Trame

B.5 Combinaisons et Résultats Finaux

B.6 Conclusions et Perspectives

Bibliography