
Ethical decision-making

In the more common applications, decision-making tries to emulate reasoning based on objective, quantifiable concepts connected by logical relationships. Great care is taken for ASs in general to adhere to strict limits on performance, comfort, safety and so forth. However, as the number of applications in which robots operate without supervision increases, they come ever closer to situations in which not only economic and scientific factors matter, but also certain moral features: the consequences of an automated system’s actions should be another parameter in its decision-making (Arkin, Ulam, and Wagner, 2012; Allen et al., 2000). The automated vehicle is an emblematic case, since controlling a 1,000 to 2,000 kg vehicle at 40 km/h with unprotected humans around clearly demands an evaluation of the risk that its actions might pose to multiple parties. Many other morally salient cases exist and will continue to arise, such as helper robots interacting with the elderly or, more topically, the level of independence granted to “autonomous” weapons. As a point of departure for the discussion of artificial moral agents, the three main domains of normative ethics are discussed first, followed by some of the implementations of artificial moral agents proposed over the years.

Artificial moral agents

Artificial moral agent (AMA) is a label covering every application that tries to implement some form of ethical reasoning. This ranges from simple applications, such as those proposed in (McLaren, 2003) and (Anderson, Anderson, and Armen, 2005), which are essentially programs that output ethical recommendations, to the works of (Thornton et al., 2017) and (de Moura et al., 2020), who propose ethical components close to real robotic applications. It should also be pointed out that in most cases these approaches aim to approximate human moral reasoning, able to address a universal set of problems by using some generic formalism to translate the world into comprehensible data.

Another option is to focus on specific applications, which is the case for the methods proposed in the next chapters: the entire ethical deliberation process is designed to solve problems with a specific format and constant properties. Therefore, the question is not whether the agent is morally good or bad, since the approach itself does not aim to simulate moral agency.

Instead, what matters is that the artificial moral agent is capable of making ethical choices that align with what a human moral agent might decide in a similar context, using the same tools and available information. This reasoning is what motivates the use of the word automated instead of autonomous. Both kinds of AMA, generic and application-specific, would be classified as explicit ethical agents under the classification proposed by (Moor, 2006), given that their ethical deliberation is explicitly coded into their systems, however generic it may be.

The role that uncertainty in information acquisition and processing plays in any ethical deliberation (Anderson and Anderson, 2007) must not be forgotten either, because it can directly change the final decision or invalidate the entire reasoning method. This is one of the main difficulties in implementing AMAs, since uncertainty is always present in measurements and is usually hard to estimate. Most works in the domain sidestep this concern by assuming that the information received from other parts of the system is exact.
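A minimal sketch of why this matters, assuming each candidate action’s harm comes with a symmetric uncertainty bound. The `HarmEstimate` class, the `choose` function and all numbers are hypothetical and do not come from the works cited above. With exact inputs the decision looks clear-cut, but the same mean values with overlapping uncertainty intervals no longer support it unambiguously:

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class HarmEstimate:
    """Estimated harm of one candidate action (hypothetical model)."""
    action: str
    mean: float   # best estimate of the harm
    bound: float  # half-width of the uncertainty interval around the mean

    def interval(self) -> Tuple[float, float]:
        return (self.mean - self.bound, self.mean + self.bound)


def choose(estimates: List[HarmEstimate]) -> Tuple[str, bool]:
    """Pick the action with the lowest mean harm and report whether the choice is
    robust, i.e. whether its worst case is still better than every other action's
    best case."""
    best = min(estimates, key=lambda e: e.mean)
    robust = all(best.interval()[1] < e.interval()[0]
                 for e in estimates if e is not best)
    return best.action, robust


# Same mean harms, first assumed exact, then with a +/-1.5 uncertainty.
exact = [HarmEstimate("brake", 10.0, 0.0), HarmEstimate("swerve", 12.0, 0.0)]
noisy = [HarmEstimate("brake", 10.0, 1.5), HarmEstimate("swerve", 12.0, 1.5)]
print(choose(exact))  # ('brake', True)
print(choose(noisy))  # ('brake', False): the intervals overlap
```

Assuming exact information, as most works do, amounts to always taking the first branch.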

All three domains of normative ethics are viable options for implementation in an AMA. Deontological and consequentialist approaches are ever present (Gips, 1995; Allen et al., 2000), most often in the form of the Kantian categorical imperative and the utilitarian maximization principle, respectively. Virtue-based methods are less popular. Since an AMA does not have agency, an ethical reasoning method based on this approach could be built around variables representing virtues that evolve over time according to the success, failure and observed consequences of its actions. Another way to implement a virtue-based approach is to use the concept of role morality, as is done in (Thornton et al., 2017). According to (Gips, 1995), virtue-based systems are usually translated into deontological approaches when applied. The other two domains are the focus of much of the published work in the area, as explained shortly.
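The contrast between the two dominant approaches can be sketched as follows, assuming each action’s consequences are summarized as a harm value per party. A deontological duty acts as a hard constraint on the action itself, regardless of its outcome, while the utilitarian principle minimizes total harm (equivalently, maximizes a utility equal to minus the harm). The action names, harm values and the `stay_in_lane` duty are hypothetical:

```python
from typing import Callable, Dict, List, Optional

# Hypothetical outcome model: harm suffered by each party for each action.
Outcome = Dict[str, float]
Rule = Callable[[str, Outcome], bool]  # True if the action violates the duty


def permitted(actions: Dict[str, Outcome], rules: List[Rule]) -> List[str]:
    """Deontological filter: keep the actions that violate none of the duties,
    whatever their consequences."""
    return [a for a, out in actions.items() if not any(r(a, out) for r in rules)]


def utilitarian(actions: Dict[str, Outcome],
                candidates: Optional[List[str]] = None) -> str:
    """Consequentialist choice: minimize the total harm summed over all parties."""
    pool = candidates if candidates is not None else list(actions)
    return min(pool, key=lambda a: sum(actions[a].values()))


actions = {
    "brake":      {"passenger": 1.0, "pedestrian": 6.0},
    "swerve":     {"passenger": 4.0, "pedestrian": 0.0},
    "accelerate": {"passenger": 0.0, "pedestrian": 9.0},
}


def stay_in_lane(action: str, outcome: Outcome) -> bool:
    """Hypothetical duty: never leave the lane, regardless of the outcome."""
    return action == "swerve"


print(utilitarian(actions))                  # swerve (total harm 4.0)
allowed = permitted(actions, [stay_in_lane])
print(allowed)                               # ['brake', 'accelerate']
print(utilitarian(actions, allowed))         # brake (total harm 7.0)
```

The two principles disagree here. Minimizing harm only among the permitted actions, as in the last line, is one possible hybrid; which combination an AMA should use is precisely a design decision.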

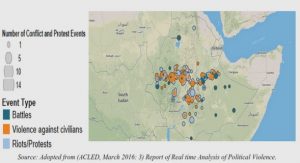

AMAs can be employed in a wide range of applications, from advising a human facing an ethical decision to deciding whether a lethal action by a drone is ethically permissible. In the automated vehicle domain, the ethical component is usually tied to the safety of road users, meaning that there is always a risk of death involved. The domain is therefore poised to be the first to present laypeople with an application that exhibits ethical behavior in some situations, since it has already been established that braking and handing control back from the AV to the driver is not always the best option (Lin, 2016).

Game theory

Game theory gathers analytical tools that can be used to model situations in which an agent’s best action depends on its expectations about the actions of other agents, and vice versa (Ross, 2001). Its first foundations, in the context of economics, were laid in (Von Neumann and Morgenstern, 1966), with the following definition: “They have their origin in the attempts to find an exact description of the endeavor of the individual to obtain a maximum of utility, or, in the case of the entrepreneur, a maximum of profit.” Three main entities can be identified in this definition:

• Individual: it represents the agent, the decision-maker that interacts with others.

• Utility: quantifies the agent’s preference towards some object or event, expressing these preferences over all possible choices of action.

• “Maximum of”: what the individual wants from the interaction; it corresponds to a deliberation method that determines the action with the highest utility.
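The three entities can be mapped onto a few lines of code. This sketch assumes a finite set of choices; the `Individual` class, its utility function and the entrepreneur’s profit function (each unit sold at 10, producing q units costing q²) are all hypothetical:

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Individual:
    """The decision-maker; its preferences are encoded by a utility function."""
    name: str
    utility: Callable[[int], float]

    def best_choice(self, choices: Iterable[int]) -> int:
        """'Maximum of': return the available choice with the highest utility."""
        return max(choices, key=self.utility)


# Hypothetical entrepreneur: sells each unit at 10, producing q units costs q**2.
entrepreneur = Individual("entrepreneur", lambda q: 10 * q - q ** 2)
print(entrepreneur.best_choice(range(11)))  # 5 (profit 25)
```

Here the entrepreneur’s utility is its profit, matching the second half of the quoted definition.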

The concept of utility arose as a quantity subjectively representing some form of fulfillment for the agent, whose main objective is thus to maximize it. The relationship between choices and the utility that emanates from them is represented by the utility function, which allows the maximization executed by every agent to be formalized mathematically. Two forms of utility function exist: an ordinal form, which expresses a preference order without any particular meaning attached to the magnitude of the utilities, and a cardinal one, in which magnitude matters (Ross, 2001), such that the utility directly measures the desired property. One example of a cardinal utility function is one that represents an amount of currency.
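The difference between the two forms can be checked numerically. In this sketch (outcomes and utility values are hypothetical), a strictly increasing transformation of the utilities leaves the preference order intact, which is all an ordinal utility encodes, but it changes the comparison between a sure outcome and a 50/50 gamble, which depends on magnitudes and therefore requires a cardinal utility:

```python
import math
from typing import Dict, List

# Hypothetical utilities of three outcomes.
u: Dict[str, float] = {"walk": 1.0, "bus": 4.0, "car": 9.0}
# A strictly increasing transformation of the same utilities.
u_sqrt = {outcome: math.sqrt(value) for outcome, value in u.items()}


def ranking(utility: Dict[str, float]) -> List[str]:
    """Ordinal information only: outcomes sorted from least to most preferred."""
    return sorted(utility, key=utility.get)


def expected_utility(utility: Dict[str, float], lottery: Dict[str, float]) -> float:
    """Probability-weighted utility of a gamble; depends on magnitudes."""
    return sum(p * utility[outcome] for outcome, p in lottery.items())


gamble = {"walk": 0.5, "car": 0.5}
print(ranking(u) == ranking(u_sqrt))                     # True: same order
print(expected_utility(u, gamble) > u["bus"])            # True: 5.0 > 4.0
print(expected_utility(u_sqrt, gamble) > u_sqrt["bus"])  # False: 2.0 > 2.0
```

This is why expected-utility reasoning in the sense of von Neumann and Morgenstern needs a cardinal utility, which is defined only up to a positive affine transformation.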

A game is the abstraction of a situation in which an agent can only maximize some utility if it anticipates the behavior of other agents. Such abstractions allow different situations to be represented and analyzed with the same set of tools; game formulations are used in domains ranging from economics to the study of social interactions and even robotic implementations. The anticipation of the other agents’ decisions is based on the rationality model assumed to explain their behavior. In everyday terms, rational behavior means that an agent looks for the best means to achieve a goal (Harsanyi, 1976).
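A minimal sketch of this anticipation, assuming both agents are rational utility maximizers: an AV and a pedestrian each choose an action, and each one’s best action depends on what it expects the other to do. The actions and payoffs are invented for illustration and do not come from this thesis:

```python
from itertools import product
from typing import List, Tuple

# Hypothetical payoffs (AV utility, pedestrian utility) for each joint action.
PAYOFF = {
    ("yield", "cross"): (1, 3),
    ("yield", "wait"):  (0, 0),
    ("go",    "cross"): (-10, -10),  # collision
    ("go",    "wait"):  (3, 1),
}
AV_ACTIONS = ("yield", "go")
PED_ACTIONS = ("cross", "wait")


def av_best_response(expected_ped: str) -> str:
    """AV action maximizing its utility given the pedestrian action it expects."""
    return max(AV_ACTIONS, key=lambda a: PAYOFF[(a, expected_ped)][0])


def ped_best_response(expected_av: str) -> str:
    """Pedestrian action maximizing its utility given the AV action it expects."""
    return max(PED_ACTIONS, key=lambda p: PAYOFF[(expected_av, p)][1])


def pure_nash_equilibria() -> List[Tuple[str, str]]:
    """Joint actions in which each agent is best-responding to the other."""
    return [(a, p) for a, p in product(AV_ACTIONS, PED_ACTIONS)
            if av_best_response(p) == a and ped_best_response(a) == p]


print(av_best_response("cross"))  # yield
print(av_best_response("wait"))   # go
print(pure_nash_equilibria())     # [('yield', 'cross'), ('go', 'wait')]
```

No AV action is best unconditionally: it should yield if it expects the pedestrian to cross and go if it expects it to wait. With two pure equilibria, the outcome hinges on how each agent models the other.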

AV’s Architecture

Before discussing the MDP implementation for AV decision-making, it is necessary to discuss the architectures used in automated vehicles, which appeared after the subsumption architecture, cited in chapter 1, reached its “capacity ceiling”. After implementing an airplane controller with a subsumption architecture, Hartley and Pipitone, 1991 noted that one flaw of such an organization is its lack of modularity, since each behavior layer interacts with the one below only by suppressing it or by allowing it to change the controller’s output. As a result, complexity grows quickly with the number of layers, and changes in one layer need to be matched by changes in all the layers above it.
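The suppression-only coupling can be illustrated with a deliberately simplified sketch in which each layer either stays silent or overrides everything below it (a fixed-priority arbitration; actual subsumption networks wire suppression into individual connections between modules). The behaviors and sensor keys are hypothetical:

```python
from typing import Callable, Dict, List, Optional

# A behavior reads the sensors and returns a command, or None when inactive.
Behavior = Callable[[Dict[str, bool]], Optional[str]]


def subsumption(layers: List[Behavior], sensors: Dict[str, bool]) -> str:
    """layers[0] is the lowest layer; the highest active layer suppresses
    every layer below it and alone determines the output."""
    for behavior in reversed(layers):
        command = behavior(sensors)
        if command is not None:
            return command
    raise ValueError("the lowest layer must always produce a command")


def cruise(sensors: Dict[str, bool]) -> Optional[str]:
    return "cruise"  # always active


def avoid_obstacle(sensors: Dict[str, bool]) -> Optional[str]:
    return "swerve" if sensors.get("obstacle") else None


def stop_at_red(sensors: Dict[str, bool]) -> Optional[str]:
    return "stop" if sensors.get("red_light") else None


layers = [cruise, avoid_obstacle, stop_at_red]
print(subsumption(layers, {}))                                     # cruise
print(subsumption(layers, {"obstacle": True}))                     # swerve
print(subsumption(layers, {"obstacle": True, "red_light": True}))  # stop
```

Adding a layer, for instance one that yields to pedestrians, means deciding where it sits in this chain and re-checking its interaction with every layer it can suppress, which reflects the modularity issue noted by Hartley and Pipitone.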

Since the beginnings of the reactive approach, other architectures have been proposed that are similar to subsumption but differ from it in key respects. According to Gat, 1998, one point these architectures have in common is the presence of three layers: one containing no internal state, another containing information about the past, and a last one holding predictions about the future. Examples of such organizations are the AuRa robot (Arkin, 1990), the task control architecture, notably used in (Simmons et al., 1997) and in many robots designed for NASA (Murphy, 2000), and the 3-tiered (3T) architecture (Gat, 1991). Since all of these modified the ideas put forth by subsumption to be more modular and to allow planning, they are classified as belonging to the hybrid paradigm (Murphy, 2000).

The three layers mentioned earlier, present in many hybrid architectures, can be described as follows (using the nomenclature presented in Gat, 1998):

• Controller: The layer that communicates with the actuators and contains the low-level algorithms. It usually has no internal state and handles the execution of behaviors passed down from the higher layers.

• Sequencer: Its role is to choose which behavior the controller must execute, while receiving information about the general planning from the upper layer.

• Deliberator: Executes the time-consuming strategic planning tasks.
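The division of labor between the three layers can be sketched as below, assuming the road network is given as a graph. The class names follow Gat’s nomenclature, but the breadth-first route planner, the behaviors and the speed set-points are hypothetical. Information flows downward as inputs and outputs rather than through suppression, the controller is stateless, and the sequencer keeps a memory of the past:

```python
from collections import deque
from typing import Dict, List, Optional


class Deliberator:
    """Slow strategic layer: plans a route to the goal (breadth-first search)."""

    def plan(self, graph: Dict[str, List[str]], start: str, goal: str) -> List[str]:
        parent: Dict[str, Optional[str]] = {start: None}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            if node == goal:
                break
            for nxt in graph.get(node, []):
                if nxt not in parent:
                    parent[nxt] = node
                    queue.append(nxt)
        if goal not in parent:
            return []  # goal unreachable
        route: List[str] = []
        current: Optional[str] = goal
        while current is not None:
            route.append(current)
            current = parent[current]
        return route[::-1]


class Sequencer:
    """Middle layer: remembers the past and selects the controller's behavior."""

    def __init__(self, route: List[str]):
        self.route = route
        self.visited: List[str] = []  # internal state about the past

    def select(self, position: str, pedestrian_ahead: bool) -> str:
        self.visited.append(position)
        if position == self.route[-1]:
            return "park"
        if pedestrian_ahead:
            return "yield"
        return "follow_lane"


def controller(behavior: str) -> float:
    """Stateless low-level layer: maps a behavior to a speed set-point in m/s."""
    return {"follow_lane": 13.9, "yield": 0.0, "park": 0.0}[behavior]


graph = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}
route = Deliberator().plan(graph, "A", "D")
sequencer = Sequencer(route)
print(route)                                     # ['A', 'B', 'D']
print(controller(sequencer.select("B", True)))   # 0.0 (yield)
print(controller(sequencer.select("B", False)))  # 13.9 (follow_lane)
```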

As can be seen in figure 3.1, the relationships between layers become input/output relationships, unlike the suppression relationship employed in the reactive paradigm. This structure is curious because it was predated by the three levels of control of the driving task presented in Michon, 1979: the strategic, tactical and operational levels (apparently there is no direct connection between these works). The highest level deals with the global planning of the mission: route determination, evaluation of the traffic level during the mission and possibly the risks involved in the chosen route. The tactical level deals with local constraints, adapting the route to interactions with other road users, while the operational level executes the trajectory, possibly modified by the tactical level. The same organization later appeared in Donges, 1999, under a different nomenclature (navigation, guidance, stabilization).

Vulnerability constant

A plethora of studies in accidentology address collisions between vehicles and pedestrians or between vehicles only. The role of the vulnerability constant $c_{vul}$ is to represent the inherent physical vulnerability of each road user in a collision, using the information captured by these statistical studies to complement the information given by the velocity variation described in subsection 4.2.1. Let us consider the following scenario: a frontal collision between two vehicles with a $\Delta v$ of 30 km/h, and another between a vehicle and a pedestrian with the same $\Delta v$. Let us first disregard $c_{vul}$ and define harm as only the variation of velocity of a road user due to a collision. According to equation 4.1, the harm for each vehicle (named $l$ and $k$, with $\vec{v}_i^{\,l} = (20, 0)$ and $\vec{v}_i^{\,k} = (-10, 0)$ in km/h, and equal masses) would be the norm of the difference between the final velocity, which is the mass-weighted average of their initial velocities (equation 4.8), and their initial velocity. This value, expressed by equations 4.6 and 4.7, must then be compared with the same situation involving vehicle $l$ and a pedestrian $p$.
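This scenario can be reproduced numerically. The sketch assumes a perfectly inelastic collision, so both road users share the mass-weighted average velocity after impact, and it ignores $c_{vul}$. The masses (1500 kg per vehicle, 75 kg for the pedestrian) and the pedestrian’s velocity of (-10, 0) km/h, chosen so that the closing speed is again 30 km/h, are assumptions for illustration; the function names are hypothetical and the code is not a reproduction of equations 4.1 and 4.6 to 4.8:

```python
import math
from typing import Tuple

Vec = Tuple[float, float]


def final_velocity(m1: float, v1: Vec, m2: float, v2: Vec) -> Vec:
    """Common post-impact velocity of a perfectly inelastic collision:
    the mass-weighted average of the initial velocities."""
    total = m1 + m2
    return ((m1 * v1[0] + m2 * v2[0]) / total,
            (m1 * v1[1] + m2 * v2[1]) / total)


def delta_v(v_initial: Vec, v_final: Vec) -> float:
    """Norm of the velocity change of one road user (same unit as the inputs)."""
    return math.hypot(v_final[0] - v_initial[0], v_final[1] - v_initial[1])


M_CAR, M_PED = 1500.0, 75.0                               # assumed masses in kg
v_l, v_k, v_p = (20.0, 0.0), (-10.0, 0.0), (-10.0, 0.0)   # km/h

vf = final_velocity(M_CAR, v_l, M_CAR, v_k)
print(delta_v(v_l, vf), delta_v(v_k, vf))                       # 15.0 15.0

vf = final_velocity(M_CAR, v_l, M_PED, v_p)
print(round(delta_v(v_l, vf), 2), round(delta_v(v_p, vf), 2))  # 1.43 28.57
```

With equal closing speeds, $\Delta v$ alone already separates the two cases, but it says nothing about how well each road user is protected against a given $\Delta v$, which is the information $c_{vul}$ is meant to add.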

Table of contents

1 Introduction

1.1 Automated driving vehicles in society

1.1.1 A note on terminology

1.1.2 History

1.1.3 Current research status

1.2 Motivation of the thesis

1.3 Contributions of the thesis

1.4 Structure of the document

2 State of the Art

2.1 Decision Making

2.1.1 Related works

2.2 Ethical decision-making

2.2.1 Normative Ethics

2.2.2 Artificial moral agents

2.3 Behavior prediction

2.3.1 Vehicle prediction

2.3.2 Pedestrian prediction

2.4 Game theory

2.4.1 Related works

2.5 Conclusion

3 MDP for decision making

3.1 Theoretical background

3.1.1 AV’s Architecture

3.1.2 Markov Decision Process

3.2 AV decision-making model

3.2.1 State and action sets

3.2.2 Transition function

3.2.3 Reward function

3.3 Results and discussion

3.3.1 Value iteration

3.3.2 Simulation

3.3.3 MDP policy results

3.4 Conclusion

4 Dilemma Deliberation

4.1 Dilemma situations

4.2 The definition of harm

4.2.1 Dv for collision

4.2.2 Vulnerability constant

4.3 Ethical deliberation process

4.3.1 Ethical Valence Theory

4.3.2 Ethical Optimization

4.3.3 Value iteration for dilemma scenarios

4.4 Results and discussion

4.4.1 Using EVT as ethical deliberation

4.4.2 Using the Ethical Optimization profiles to deliberate

4.5 Conclusion

5 Road User Intent Prediction

5.1 Broadening the scope

5.2 Intent estimation

5.2.1 Simulated Environment

5.2.2 Agent’s decision model

5.2.3 Estimating other agents’ intent

5.3 Incomplete game model

5.3.1 Nash equilibrium

5.3.2 Harsanyi’s Bayesian game

5.3.3 Decision making procedure

5.4 Simulation results

5.4.1 Multi-agent simulation

5.5 Final remarks

6 Conclusion

6.1 Final remarks

6.2 Future research perspectives

A Simulation set-up

A.1 Webots simulation structure

A.2 Controlling a car-like vehicle

A.3 Controlling the pedestrian

A.4 Necessary modifications

Bibliography