
Related Work on Interactive Extracting Knowledge from Texts

Regarding support for domain experts in extracting knowledge from texts, several researchers [Cimiano and Völker, 2005, Aussenac-Gilles et al., 2008, Szulman et al., 2010, Tissaoui et al., 2011] have studied how to maintain the traceability between the knowledge model and the source of knowledge in texts, and how to keep the two co-evolving.

Text2Onto, one of the pioneering frameworks for ontology learning from texts, was presented by Cimiano et al. [Cimiano and Völker, 2005, Maedche and Staab, 2001, Maedche and Staab, 2000a, Maedche and Staab, 2000b]. One advantage of Text2Onto is that it provides traceability between the knowledge model and the source of knowledge in texts, which helps domain experts understand why concepts are introduced in the ontology. To maintain this traceability, Text2Onto stores, for each object in the ontology, a pointer to the corresponding terms in texts. A second advantage is that, thanks to these links between objects in the ontology and terms in texts, Text2Onto avoids reprocessing the whole corpus from scratch each time the corpus changes: it updates only the corresponding parts of the ontology, i.e. the source of knowledge and the ontology co-evolve. However, Text2Onto only works with atomic concepts: its concepts have no formal definitions, and objects are only linked with terms.
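The pointer-based traceability described above can be illustrated with a minimal sketch. It assumes a toy in-memory store; the names `TermOccurrence`, `TraceableOntology`, `explain` and `remove_document` are hypothetical and are not Text2Onto's API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TermOccurrence:
    """A pointer to a term in the corpus: document id plus character span."""
    doc_id: str
    start: int
    end: int
    text: str


class TraceableOntology:
    """Toy ontology whose objects keep pointers to the terms that justify them."""

    def __init__(self):
        # ontology object -> set of term occurrences supporting it
        self.evidence = defaultdict(set)

    def add_evidence(self, obj, occurrence):
        self.evidence[obj].add(occurrence)

    def explain(self, obj):
        """Return the term occurrences that justify an ontology object."""
        return sorted(self.evidence.get(obj, ()), key=lambda o: (o.doc_id, o.start))

    def remove_document(self, doc_id):
        """Drop the pointers into doc_id; objects left without evidence are removed."""
        dropped = []
        for obj in list(self.evidence):
            self.evidence[obj] = {o for o in self.evidence[obj] if o.doc_id != doc_id}
            if not self.evidence[obj]:
                del self.evidence[obj]
                dropped.append(obj)
        return dropped
```

Under these assumptions, `explain` answers the question of why an object is in the ontology, and `remove_document` changes only the objects that pointed into the removed document, without reprocessing the rest of the corpus.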

Terminae [Aussenac-Gilles et al., 2008], a platform for ontology engineering from texts, supports domain experts in evaluating and adding knowledge. Like Text2Onto, Terminae provides domain experts with traceability between the ontology and the source of knowledge in texts. When domain experts build or maintain the knowledge model of the ontology, they can retrieve the terms that explain why concepts were introduced in the ontology. In Terminae, traceability is achieved through links that connect terms in texts to concepts in the ontology. Several terms in texts can link to one concept in the ontology, and domain experts can define or edit the terms of a concept through a terminological form and then return to modify the knowledge model. The advantage of Terminae is that concepts in the ontology can be linked to more than one term in texts and can carry definitions written by domain experts. However, Terminae also only works with atomic concepts: its concepts have no formal definitions and are defined by terms found in texts or by natural language definitions added by domain experts. Moreover, in Terminae the knowledge model of the ontology and the source of knowledge do not co-evolve: domain experts have to modify the knowledge model themselves after they edit or add knowledge in the source of knowledge. To complete Terminae, there is therefore a need to take into account both atomic and defined concepts, and to keep the source of knowledge and the knowledge model of the ontology co-evolving.

In line with Text2Onto and Terminae in keeping the traceability between the knowledge model and the source of knowledge in texts, Dafoe, a platform for building ontologies from texts, was introduced by Szulman et al. [Szulman et al., 2010]. Szulman et al. [Szulman et al., 2010] claimed that textual data cannot be mapped directly into an ontology, as is done in Text2Onto and Terminae. To transform texts into ontologies, Dafoe uses a data model composed of four layers: a corpora layer for the original texts, a terminological layer for the terms extracted from the texts, a termino-conceptual layer for the relations between terms and concepts, and an ontology layer for the ontology. The advantage of Dafoe is that, by adding intermediate layers that formalize the source of knowledge in texts, it allows concepts in an ontology to be independent of the linguistic information in texts. This work shows that formalizing the source of knowledge in texts is also important for the process of knowledge extraction from texts. For our purpose, formalizing the source of knowledge in texts can bridge the possible gap, seen in section 2.2.1, between a knowledge model resulting from a bottom-up approach and a knowledge model from a domain expert. The source of knowledge can then be modified to obtain a better knowledge model with respect to domain experts’ requirements without touching the original texts.
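To make the four-layer idea concrete, the following is a minimal sketch in which each layer only references the layer below it. The class and field names are hypothetical illustrations and do not reproduce Dafoe's actual data model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Term:
    """Terminological layer: a term and its occurrences in the corpora layer."""
    label: str
    occurrences: list = field(default_factory=list)  # (doc_id, start, end) triples


@dataclass
class TerminoConcept:
    """Termino-conceptual layer: groups the terms that relate to one concept."""
    name: str
    terms: list = field(default_factory=list)  # Term objects


@dataclass
class Concept:
    """Ontology layer: a concept that reaches the texts only through the layers below."""
    name: str
    source: Optional[TerminoConcept] = None


def trace(concept, corpora):
    """Follow the layers downwards and return the text snippets behind a concept."""
    if concept.source is None:
        return []
    snippets = []
    for term in concept.source.terms:
        for doc_id, start, end in term.occurrences:
            snippets.append(corpora[doc_id][start:end])
    return snippets
```

In this sketch the corpora layer is a plain dictionary from document id to text. Renaming a `Concept` does not touch any `Term`, which is one way to picture concepts being independent of the linguistic information in texts.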

Another system, EvOnto, was introduced by Tissaoui et al. [Tissaoui et al., 2011]. In EvOnto, semantic annotations are used to formalize the source of knowledge in texts: they connect terms in texts to objects of concepts in an ontology. The advantage of EvOnto is that it adapts the ontology when documents are added or withdrawn, and keeps the semantic annotations up to date when the ontology changes. In this system, domain experts can make changes in the ontology. However, the experts cannot make changes in the source of knowledge, i.e. the semantic annotations, nor can they add background knowledge. In addition, EvOnto only works with atomic concepts, since its semantic annotations only connect terms to objects of concepts in the ontology.

Semantic Annotation Techniques

Named entity recognition (or the information extraction approach) is the most common approach to (semi-)automatic semantic annotation [Bontcheva and Cunningham, 2011]. In named entity recognition, an entity refers to a real-world object such as “Francois Hollande, born 12 August 1954, a French politician, the current President of France, as well as Co-prince of Andorra, since 2012”. A name (or mention) of an entity is a lexical expression referring to that entity in a text. For example, the entity above can be mentioned in a text by names such as “Francois Hollande” or “President Hollande”. Entities of the same type are grouped into concepts such as person, country, or organization.

In the named entity recognition approach, the main tasks are [Bontcheva and Cunningham, 2011]:

• Named entity recognition: This task consists of identifying the names of named entities in texts and assigning concepts to them [Grishman and Sundheim, 1996].

• Co-reference resolution: This task deals with deciding if two terms in texts refer to the same entity by using the contextual information from texts and the knowledge from ontology.

• Relation extraction: This task deals with identifying relations between entities in texts.

The techniques for identifying named entities in texts are generally classified into two groups [Sarawagi, 2008, Bontcheva and Cunningham, 2011]:

• Rule-based systems (or pattern-based systems [Reeve and Han, 2005]).

• Machine-learning systems.

Rule-based systems rely on lexicons and handcrafted rules and/or an existing list of reference entity names to identify named entities. In these systems, named entities are identified by matching texts against the rules and/or by searching the list of entity names. The rules are designed by language engineers. An example of such a rule is “[person], [office] of [organization]”, which determines which person holds what office in what organization. This rule can be used to recognize named entities of people, offices and organizations in texts such as “Vuk Draskovic, leader of the Serbian Renewal Movement”. Well-known rule-based systems for named entity recognition include FASTUS [Appelt et al., 1995], LaSIE [Gaizauskas et al., 1995] and LaSIE II [Humphreys et al., 1998]. FASTUS uses handcrafted regular expression rules to identify entity names; LaSIE and LaSIE II use lists of reference entity names and grammar rules. The advantages of rule-based systems are that they are efficient for domains where the construction of terminology follows a certain formalism, and that they do not require training data. Their disadvantage is that they require linguistic and domain expertise, so the rules and lexicons are expensive to create [Sarawagi, 2008, Bontcheva and Cunningham, 2011]. Moreover, it is difficult to build a list of entity names that covers the whole vocabulary of a domain of discourse. Rule-based systems are therefore expensive to maintain and not transferable across domains. Consequently, researchers have shifted their focus towards machine-learning approaches since these were introduced.
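As an illustration of how a rule such as “[person], [office] of [organization]” might be written, here is a minimal sketch using a regular expression. It is an assumption for illustration only, not the rule format of FASTUS or LaSIE: sequences of capitalised words stand in for person and organization names, and a run of lowercase words stands in for the office.

```python
import re

# Hypothetical stand-in for a name: one or more capitalised words.
NAME = r"[A-Z][\w-]*(?: [A-Z][\w-]*)*"

# Hypothetical rendering of the rule "[person], [office] of [organization]".
RULE = re.compile(
    rf"(?P<person>{NAME}), (?P<office>[a-z][a-z ]*?) of (?:the )?(?P<organization>{NAME})"
)


def extract(text):
    """Return one dict per match with the person, office and organization found."""
    return [m.groupdict() for m in RULE.finditer(text)]


print(extract("Vuk Draskovic, leader of the Serbian Renewal Movement"))
```

A pattern this simple misses lowercase name particles and any phrasing that departs from the template, which reflects the maintenance cost of handcrafted rules discussed above.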

Compared with rule-based systems, the advantage of machine-learning systems is that they identify named entities automatically. However, they require at least some annotated training data. Depending on the training data, machine-learning approaches can be further divided into three sub-groups: supervised, semi-supervised (or weakly supervised) and unsupervised methods [Nadeau and Sekine, 2007].

In supervised learning methods, the training data is an annotated training corpus, and the system uses it to train an extraction model that recognizes similar objects in new data, which amounts to inducing a rule-based system automatically. Supervised learning methods for named entity recognition include Support Vector Machines (SVM) ([Isozaki and Kazawa, 2002, Ekbal and Bandyopadhyay, 2010]), Hidden Markov Models ([Zhou and J.Su, 2004, Ponomareva et al., 2007]), Conditional Random Fields (CRF) ([Kazama and Torisawa, 2007, Arnold et al., 2008]), Decision Trees ([Finkel and Manning, 2009]), etc. The main disadvantages of supervised learning methods are that they require a large annotated training corpus, which has to be created manually, and that they depend heavily on it.

In semi-supervised learning methods, the training data combines a small set of annotated examples with an unannotated training corpus, which reduces the system’s dependence on annotated training data. The system is first trained on the initial set of examples and then annotates the unannotated training corpus. The resulting annotations are used to augment the initial annotated training corpus. The process repeats for several iterations to refine the semantic annotations of the documents. The disadvantage of these methods is that errors in the annotations can propagate when those annotations are reused for training in later iterations.

In unsupervised learning methods, the data is unannotated. Unsupervised learning methods use clustering techniques ([Cimiano and Völker, 2005]) to group similar objects together. The disadvantages of machine-learning systems are that their output can be noisy and that they may require re-annotation if the semantic annotation requirements change after the training data has been annotated.
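The semi-supervised loop described above can be sketched with a very simple pattern bootstrapper. This is one toy instance of the idea, not a method from the cited work: the function name `bootstrap`, the use of the preceding word as the only context, and the capitalisation test are all assumptions made for illustration.

```python
def bootstrap(sentences, seeds, iterations=3):
    """Grow a set of entity names from a few seeds using left-context words."""
    entities = set(seeds)
    for _ in range(iterations):
        # 1. Train: collect the word that immediately precedes a known entity.
        contexts = set()
        for tokens in sentences:
            for i in range(1, len(tokens)):
                if tokens[i] in entities:
                    contexts.add(tokens[i - 1])
        # 2. Annotate: a capitalised token after a learned context becomes an entity.
        found = {
            tokens[i]
            for tokens in sentences
            for i in range(1, len(tokens))
            if tokens[i - 1] in contexts and tokens[i][:1].isupper()
        }
        if found <= entities:
            break  # nothing new was annotated, so stop early
        # 3. Augment the annotated set and repeat.
        entities |= found
    return entities


sentences = [
    ["President", "Hollande", "spoke"],
    ["President", "Obama", "replied"],
    ["They", "visited", "Obama"],
    ["They", "visited", "France"],
]
print(sorted(bootstrap(sentences, {"Hollande"})))
```

In this made-up corpus the seed is a person name, yet the loop ends up annotating "France" as well: once "Obama" is accepted, the context "visited" is learned and then matches a country. This is the error propagation mentioned above.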

Encoding Semantic Annotations

Resource Description Framework (RDF) is a common format for encoding semantic annotations and is sufficient for the basic purposes of lightweight ontologies [Kiryakov et al., 2003]. There are three common approaches to encoding semantic annotations in texts [Bontcheva and Cunningham, 2011]:

• Adding metadata directly to the texts’ context (inline markup), with URIs pointing to the ontology.

• Adding metadata to the start/end of the texts.

• Storing metadata and texts in separate files and/or loading them into a semantic repository, with the metadata pointing to the texts.

Comparing the three approaches, the first makes retrieval faster when a system needs to find where a concept or object is mentioned. However, in the first approach metadata and texts are not independent, and the system must be authorized to modify the texts. The second approach encodes the fact that certain concepts or objects are mentioned in a particular text, but it cannot retrieve where these concepts or objects occur in the text. It requires less storage than the first approach because it annotates only one of the mentions. In this approach, the system must also be authorized to modify the texts. In the third approach, metadata is independent of the texts. The choice of approach for encoding semantic annotations therefore depends on whether it is feasible to modify the text content.
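The difference between the three approaches can be seen by encoding the same annotation in each style. The sketch below is illustrative only: the markup syntax, the comment-style header, the dictionary layout and the `http://example.org/onto#Person` URI are all assumptions, not a format prescribed by the cited work.

```python
def encode_inline(text, start, end, concept_uri):
    """Approach 1: wrap the mention in markup; the text itself is modified."""
    return f'{text[:start]}<span about="{concept_uri}">{text[start:end]}</span>{text[end:]}'


def encode_header(text, concept_uris):
    """Approach 2: prepend document-level metadata; mention positions are not recorded."""
    header = "".join(f"<!-- mentions: {uri} -->\n" for uri in concept_uris)
    return header + text


def encode_standoff(doc_id, start, end, concept_uri):
    """Approach 3: leave the text untouched; the metadata points to it by offsets."""
    return {"doc": doc_id, "start": start, "end": end, "concept": concept_uri}


text = "Francois Hollande is a French politician."
PERSON = "http://example.org/onto#Person"  # hypothetical ontology URI
start, end = 0, len("Francois Hollande")

print(encode_inline(text, start, end, PERSON))
print(encode_header(text, [PERSON]))
print(encode_standoff("doc-1", start, end, PERSON))
```

Only the third function returns something that can be stored without write access to the text, and only the first and third keep enough information to locate the mention.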

Figure 2.7 shows an example of encoding semantic annotations. The first part of the figure shows a text, an abstract with ID 10071657 taken from PubMed. The second part of the figure shows the encoded semantic annotations of that text, as produced by SemRep [Rindflesch and Fiszman, 2003]. In this system, the texts and the metadata are stored separately.

Knowledge Extraction and Semantic Annotation

The review of the background knowledge and of semantic annotation techniques shows that change management is still an open issue in semantic annotation. Semantic annotations are tied to one or more ontologies. Thus, if an ontology changes, one or more semantic annotations need to be updated [Bontcheva and Cunningham, 2011]. For example, if an ontology is updated by removing a concept or an object, the question is which semantic annotations should be updated. As we saw in section 2.3.2, current semantic annotation techniques run into difficulty when the semantic annotation requirements change: re-annotation may be required, which is expensive and time-consuming. Semantic annotation thus shares with knowledge extraction the need to keep the knowledge model and the semantic annotations co-evolving.

Semantic annotation can be noisy. Even if an annotation is correct, it may be useless for building the ontology with respect to experts’ requirements. Euzenat [Euzenat, 2002] formalized semantic annotation as two mapping functions: indexing, which maps ontologies to a set of documents, and annotation, which maps a set of documents to ontologies. He claimed that the knowledge from the ontology makes explicit what is implicit in the annotations. Therefore, semantic annotation could be improved by the knowledge extraction process. For example, if a concept in the knowledge model that groups the objects chicken and penguin with the attribute has wings is not in accordance with the expert knowledge, then the set of annotations should be improved by removing or invalidating the corresponding annotations.
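The chicken and penguin example above can be sketched as follows, assuming each annotation records which object and attribute of the knowledge model it supports. The `Annotation` record, the `invalidate` function and the sample annotations are hypothetical and made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Annotation:
    doc_id: str
    obj: str          # object of the knowledge model, e.g. "penguin"
    attribute: str    # attribute of the knowledge model, e.g. "has_wings"
    valid: bool = True


def invalidate(annotations, objects, attributes):
    """Mark as invalid every annotation linking one of `objects` to one of `attributes`."""
    touched = []
    for ann in annotations:
        if ann.valid and ann.obj in objects and ann.attribute in attributes:
            ann.valid = False
            touched.append(ann)
    return touched


# Made-up annotations; only the has_wings links come from the example in the text.
annotations = [
    Annotation("d1", "chicken", "has_wings"),
    Annotation("d2", "penguin", "has_wings"),
    Annotation("d2", "penguin", "lays_eggs"),
]
rejected = invalidate(annotations, {"chicken", "penguin"}, {"has_wings"})
print(len(rejected), [a.valid for a in annotations])
```

Marking annotations invalid rather than deleting them keeps the original texts and the annotation history untouched, which is one possible reading of "removing or invalidating".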

Another problem is that taking into account both atomic and defined concepts remains challenging in semantic annotation, as current semantic annotation techniques mainly work with atomic concepts. Researchers [Buitelaar et al., 2009, Declerck and Lendvai, 2010] have tried to annotate non-atomic concepts. In 2009, Buitelaar et al. [Buitelaar et al., 2009] presented a model called LexInfo for annotating non-atomic concepts, in which additional linguistic information was associated with concept labels. They claimed that LexInfo could be used for annotating non-atomic concepts, but they did not actually apply it to semantic annotation. Later, in 2010, Declerck and Lendvai [Declerck and Lendvai, 2010] proposed merging the models from Terminae [Aussenac-Gilles et al., 2008] (see section 2.2.4) and LexInfo [Buitelaar et al., 2009] for the semantic annotation of texts. To deal with annotating non-atomic concepts, this model [Declerck and Lendvai, 2010] had three layers of description within the ontology, in which linguistic objects pointed to terms and terms pointed to concepts. This model was not actually applied to semantic annotation either. The limitation of these models [Buitelaar et al., 2009, Declerck and Lendvai, 2010] is that it is not easy to build the linguistic information for all concepts. There is therefore still a need to keep the traceability between the source of knowledge in texts and the knowledge model [Cimiano and Völker, 2005, Aussenac-Gilles et al., 2008, Szulman et al., 2010, Tissaoui et al., 2011], as we have seen in section 2.2.4.

To address the problems above, we unify knowledge extraction and semantic annotation into one single process that benefits both. First, semantic annotations are used to formalize the source of knowledge in texts, in order to bridge, without touching the original texts, the possible gap in the knowledge extraction process between a knowledge model from a bottom-up approach and a knowledge model from a domain expert. In addition, semantic annotations help the knowledge extraction process by providing traceability between the knowledge model and the source of knowledge in texts. In return, knowledge extraction helps to update and improve semantic annotations efficiently by keeping the link between each knowledge unit in the knowledge model and the semantic annotations. Moreover, when knowledge extraction and semantic annotation form one single process and the link between each knowledge unit in the knowledge model and the semantic annotations is preserved, being able to work with both atomic and defined concepts in knowledge extraction means that both can be taken into account in semantic annotation as well. From this perspective, knowledge extraction and semantic annotation operate in a loop, as depicted in Figure 2.8. In this way, semantic annotations and ontologies are two sides of the same resource and co-evolve.

Formal Concept Analysis

When we use the FCA method to build the concept hierarchy of an ontology, two sets of information are needed: a set of objects and a set of attributes. The objects are the relevant instances, and the attributes are the characteristics of these objects that are useful for our tasks or applications. These sets are used to build a formal context. This formal context is then used to build a concept lattice, which is considered as a knowledge model.

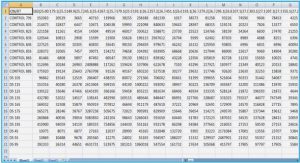

Definition 2.9 (Formal context). A formal context is a triple $K = (G, M, I)$ where $G$ denotes a set of objects, $M$ a set of attributes, and $I$ a binary relation defined on $G \times M$. $I \subseteq G \times M$ is a binary table which assigns an attribute to an object. We write $gIm$ or $(g, m) \in I$ to mean that object $g$ has attribute $m$.

Example 2.1. Table 2.1 shows a formal context of animals. In this formal context, the objects are animals (g1: bear, g2: carp, g3: chicken, g4: crab, g5: dolphin, g6: honeybee, g7: penguin, g8: wallaby) and the attributes are properties describing the animals (m1: has_two_legs, m2: lays_eggs, m3: can_fly, m4: has_wings, m5: has_fins, m6: has_feathers, m7: has_milk, m8: has_backbone, m9: lives_in_water). A cross in position $ij$ of the table indicates that object $i$ has attribute $j$. For example, the first row in the table indicates that a bear has two legs, has milk, and has backbone.
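A formal context can be written down directly as three sets. The sketch below is a partial illustration and does not reproduce Table 2.1: it contains only the incidences stated in the text (the bear row, and has_wings for chicken and penguin), so the chicken and penguin rows are incomplete. The helper names are assumptions; `common_attributes` computes the attributes shared by a set of objects, which is standard in FCA but is not defined in this excerpt.

```python
# Objects and attributes: a subset of those listed in Example 2.1.
G = {"bear", "chicken", "penguin"}
M = {"has_two_legs", "has_wings", "has_milk", "has_backbone"}

# Incidence relation I as a set of (object, attribute) pairs.
# Only pairs stated in the text are included; the real table has more crosses.
I = {
    ("bear", "has_two_legs"),
    ("bear", "has_milk"),
    ("bear", "has_backbone"),
    ("chicken", "has_wings"),
    ("penguin", "has_wings"),
}

# I must be a subset of G x M.
assert I <= {(g, m) for g in G for m in M}


def has(g, m):
    """gIm: does object g have attribute m?"""
    return (g, m) in I


def attributes_of(g):
    """The row of object g in the binary table."""
    return {m for m in M if (g, m) in I}


def common_attributes(objects):
    """Attributes shared by every object in the given set."""
    return {m for m in M if all((g, m) in I for g in objects)}


print(sorted(attributes_of("bear")))
print(common_attributes({"chicken", "penguin"}))
```

Storing $I$ as a set of pairs keeps the code close to the notation $(g, m) \in I$ of the definition; a boolean matrix would be the closer analogue of the cross table.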

Table of contents:

Chapter 1 Introduction

1.1 Context and Motivation

1.2 Approaches and Contributions of the Thesis

1.3 Thesis Organization

Chapter 2 Knowledge Extraction and Semantic Annotation of Texts

2.1 Introduction

2.2 Knowledge Extraction

2.2.1 Knowledge Extraction Process

2.2.2 Knowledge Extraction from Texts

2.2.3 Ontology

2.2.4 Related Work on Interactive Extracting Knowledge from Texts

2.3 Semantic Annotation

2.3.1 What is Semantic Annotation?

2.3.2 Semantic Annotation Techniques

2.3.3 Encoding Semantic Annotations

2.3.4 Knowledge Extraction and Semantic Annotation

2.3.5 Choice of Data Mining Method

2.4 Formal Concept Analysis

2.4.1 Basic Concepts of Lattice Theory

2.4.2 Formal Concept Analysis

2.4.3 Algorithms for Lattice Construction

2.4.4 Knowledge Extraction and Semantic Annotation with FCA

2.5 Related Work on Expert Interaction for Bridging Knowledge Gap

2.6 Summary

Chapter 3 KESAM: A Tool for Knowledge Extraction and Semantic Annotation Management

3.1 Introduction

3.2 The KESAM Methodology

3.2.1 The KESAM’s Features

3.2.2 The KESAM Process

3.3 Semantic Annotations for Formalizing the Source of Knowledge, Building Lattices and Providing the Traceability

3.3.1 Semantic Annotations for Formalizing the Source of Knowledge

3.3.2 Building Lattices and Providing the Traceability with Semantic Annotations

3.4 Expert Interaction for Evaluation and Refinement

3.4.1 Formulating the Changes

3.4.2 Implementing the Changes

3.4.3 Evolution of the Context and Annotations

3.5 The KESAM Implementation and Case Study

3.5.1 The KESAM User Interface and Usage Scenario

3.5.2 Case Study

3.6 Conclusion

Chapter 4 Formal Knowledge Structures and “Real-World”

4.1 Introduction

4.2 Preliminaries

4.2.1 Attribute Implication

4.2.2 Constrained Concept Lattices w.r.t. Attribute Dependencies

4.2.3 Projections

4.3 Projections for Generating Constrained Lattices

4.3.1 Discussion about Constrained Lattices

4.3.2 Projections for Constrained Lattices w.r.t. Dependencies between Attribute Sets

4.3.3 Projections for Constrained Lattices w.r.t. Sets of Dependencies

4.3.4 The Framework for Generating Constrained Lattices

4.4 Related Work, Discussion and Conclusion

Chapter 5 Conclusion and Perspectives

5.1 Summary

5.2 Perspectives

Bibliography