Analysis: k-mer embeddings intrinsic evaluation

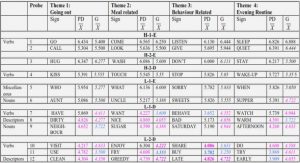

K-mer embeddings are trained in a self-supervised manner: the algorithm tries to predict the k-mers surrounding the current one. The three main adjustable hyperparameters of these algorithms are the embedding size (dimensionality), the k-mer size (shorter or longer pieces of DNA) and the window size (fewer or more surrounding words). Together they define a large parameter space that influences several aspects, such as the vocabulary size, the learning of the embeddings, the processing time and, more globally, the final representation. Increasing the value of k increases the size of the dictionary, and the learning time inevitably becomes longer for any algorithm (Figure 3.5 and Table 3.2).
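To make the roles of the k-mer size and the window size concrete, here is a minimal sketch of how a read could be turned into k-mer tokens and into (center, context) training pairs. It assumes overlapping k-mers with a stride of 1 and a symmetric window, neither of which is stated in the text; the function names `kmers` and `skipgram_pairs` are hypothetical. The last loop prints the upper bound 4^k on the vocabulary size, which is why the dictionary grows so quickly with k.

```python
def kmers(sequence, k):
    """Return the overlapping k-mers of a DNA sequence (stride 1, an assumption)."""
    return [sequence[i:i + k] for i in range(len(sequence) - k + 1)]


def skipgram_pairs(tokens, window):
    """Yield (center, context) pairs for every token within `window` positions."""
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                yield center, tokens[j]


read = "ACGTACGTGA"  # toy read, for illustration only
tokens = kmers(read, k=3)
pairs = list(skipgram_pairs(tokens, window=2))
print(tokens)
print(len(pairs), "training pairs")

# Upper bound on the vocabulary size with 4 nucleotides: 4 ** k.
for k in (3, 6, 9, 13):
    print(k, 4 ** k)
```

A larger window produces more pairs per k-mer, and a larger k produces a larger vocabulary, so both increase the training cost.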

Analysis: Read embeddings intrinsic evaluation

Embeddings at the read level cannot benefit from the analysis in section 3.3.1.2 (correlation between the edit distance or Needleman-Wunsch score and k-mer embeddings) because reads are much longer than k-mers. Nevertheless, as a genome catalog has been used to train the read embeddings, genomes can be projected into this new vector space. We would expect species from the same genus, or with similar genetic material, to be closer to each other in the embedding space. We have thus set up two methods to quantify this phenomenon. The first is to project and visualize genome embeddings using the t-SNE algorithm. Results in Figure 3.9 highlight that some clusters are formed of genomes from the same family. The second is to run a Mantel test, which measures the correlation between two distance matrices computed over the genomes. The first matrix is the cosine similarity between genome embeddings; the second is the Mash distance, a genome distance estimated with the MinHash algorithm, between the genomes' DNA. A high value in the Mantel test implies that the cosine similarity of the embeddings is correlated with the Mash distance of the DNA, which is a good indicator of the relevance of the representation learnt by the model. Models are tuned and results are reported in Table 3.4.
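The Mantel test can be sketched as a permutation test on the Pearson correlation between the upper triangles of two matrices. The version below is only an illustration under stated assumptions: it uses the cosine distance (1 minus the cosine similarity) so that both matrices are distances, a one-sided p-value, and toy embeddings and a made-up reference matrix standing in for the Mash distances. None of the values come from the experiments.

```python
import math
import random


def cosine_distance(u, v):
    """1 - cosine similarity, so that identical directions have distance 0."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


def pearson(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def upper_triangle(matrix, order):
    """Flatten the strict upper triangle after relabelling rows/columns by `order`."""
    n = len(order)
    return [matrix[order[i]][order[j]] for i in range(n) for j in range(i + 1, n)]


def mantel(d1, d2, permutations=999, seed=0):
    """Return (correlation, one-sided p-value) between two distance matrices."""
    rng = random.Random(seed)
    identity = list(range(len(d1)))
    x = upper_triangle(d1, identity)
    observed = pearson(x, upper_triangle(d2, identity))
    hits = 1  # the observed arrangement counts as one permutation
    for _ in range(permutations):
        order = identity[:]
        rng.shuffle(order)  # permute rows and columns of d2 together
        if pearson(x, upper_triangle(d2, order)) >= observed:
            hits += 1
    return observed, hits / (permutations + 1)


# Toy genome embeddings and a made-up reference distance matrix (hypothetical values).
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9], [0.7, 0.7]]
embedding_dist = [[cosine_distance(u, v) for v in embeddings] for u in embeddings]
reference_dist = [
    [0.00, 0.05, 0.90, 0.80, 0.40],
    [0.05, 0.00, 0.85, 0.75, 0.35],
    [0.90, 0.85, 0.00, 0.05, 0.40],
    [0.80, 0.75, 0.05, 0.00, 0.35],
    [0.40, 0.35, 0.40, 0.35, 0.00],
]
r, p_value = mantel(embedding_dist, reference_dist)
print(f"Mantel r = {r:.3f}, p = {p_value:.3f}")
```

Rows and columns are permuted together because the entries of a distance matrix are not independent; shuffling individual cells would break the matrix structure and invalidate the test.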

Analysis: Read embeddings extrinsic evaluation on a read classification task

As shown in Figure 3.10 and in section 2.2.1, the datasets used in the experiments are composed of simulated metagenomes. Simulation makes it possible to mimic NGS sequencing data while keeping the label of each read, which allows supervised models to be trained. Read2Genome is evaluated with both types of sequencing technology, Illumina and Nanopore.

Given a read, we ensure that the Read2Genome model returns the probability associated with its prediction that the read belongs to a genome. In this way, we can set a threshold to reject uncertain classifications. As there is an extremely high number of sequences per metagenome (often greater than 10M), rejecting uncertain predictions improves the precision of the model without impacting clusters of reads. Liang et al. [Lia+20] also use a manually determined reject threshold in DeepMicrobes. The metric for controlling the rejection is the “rejection rate”, calculated by dividing the number of rejected reads by the total number of reads.
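The rejection mechanism described above can be sketched as follows. This is an illustration only: the function names `classify_with_reject` and `rejection_rate` are hypothetical, rejected reads are encoded as `None` by convention, and the probabilities are toy values rather than model outputs.

```python
def classify_with_reject(probabilities, threshold):
    """Return the most probable class per read, or None if it is below `threshold`."""
    predictions = []
    for probs in probabilities:
        best = max(range(len(probs)), key=probs.__getitem__)
        predictions.append(best if probs[best] >= threshold else None)
    return predictions


def rejection_rate(predictions):
    """Number of rejected reads divided by the total number of reads."""
    return sum(p is None for p in predictions) / len(predictions)


# One row of class probabilities per read (toy values).
probs = [
    [0.90, 0.05, 0.05],
    [0.40, 0.35, 0.25],
    [0.10, 0.80, 0.10],
    [0.34, 0.33, 0.33],
]
preds = classify_with_reject(probs, threshold=0.5)
print(preds, rejection_rate(preds))
```

Raising the threshold keeps only the most confident reads, which trades a higher rejection rate for a higher precision on the reads that remain.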

We compare the results of the FastDNA and Transformer+MLP models on Illumina datasets, trained on 10 of the 235 species in the dataset (only 10 species were selected to allow rapid learning). As FastDNA obtains the best scores on 10 species, we trained it on all 235 species with the parameters recommended by Menegaux and Vert [MV19]: 13 for the k-mer size, 100 for the embedding dimension and 30 for the number of epochs. We computed and plotted the accuracy, precision, recall, F1-score and rejection rate as a function of the rejection threshold (see Figure 3.11). The threshold axis corresponds to the minimum probability that the predicted class must reach for the read not to be rejected. The metrics' formulas are recalled below:

$$\text{Accuracy} = \frac{TP + TN}{N}, \qquad \text{Precision} = \frac{1}{C}\sum_{i=1}^{C} \frac{TP_i}{TP_i + FP_i}, \qquad \text{Recall} = \frac{1}{C}\sum_{i=1}^{C} \frac{TP_i}{TP_i + FN_i}.$$
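The class-averaged precision and recall recalled above can be computed as in the sketch below. It makes two assumptions that the text does not specify: a class with no predicted (or no true) reads contributes 0 to the average, and rejected reads have already been removed from both lists. The function name `macro_precision_recall` and the labels are hypothetical.

```python
def macro_precision_recall(y_true, y_pred, n_classes):
    """Average per-class precision and recall over `n_classes` classes."""
    precisions, recalls = [], []
    for c in range(n_classes):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        # Convention (assumption): an undefined ratio counts as 0.
        precisions.append(tp / (tp + fp) if tp + fp else 0.0)
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
    return sum(precisions) / n_classes, sum(recalls) / n_classes


y_true = [0, 0, 1, 1, 2, 2]  # toy labels
y_pred = [0, 1, 1, 1, 2, 0]
precision, recall = macro_precision_recall(y_true, y_pred, n_classes=3)
print(f"precision = {precision:.3f}, recall = {recall:.3f}")
```

Averaging per class gives every species the same weight, whatever its number of reads, which matters when the 235 species are unevenly represented.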

metagenome2vec: learning metagenome embeddings

The next step is to create metagenome embeddings from read embeddings or from sets of read embeddings. We consider two approaches to building metagenome embeddings: (i) the vectorial representation as a baseline and (ii) the MIL representation as our reference method. The notations in the next sections follow those introduced in section 3.1.

metagenome2vec: Vectorial representation

Once a low-dimensional representation of the reads is available, all reads from a given metagenome are transformed into embeddings. In this representation, called M2V-VR, they are all summed together, resulting in a single instance embedding for one metagenome. The representation of a metagenome is computed as shown in equation 3.1:

$$\phi(x_m) = \sum_{s \in x_m} \omega(s), \quad m \in M, \qquad \omega : \left\{ \begin{array}{rcl} x_m & \to & \mathbb{R}^E \\ s & \mapsto & \omega(s) \end{array} \right. \tag{3.1}$$

with $\omega$ the Read2Vec transformation, $x_m$ the set of reads in the metagenome $m$ and $E$ the dimension of the embeddings.
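The summation of equation 3.1 amounts to a component-wise sum of the read embeddings of one metagenome. A minimal sketch, assuming the Read2Vec transformation has already been applied to every read and using toy 3-dimensional vectors (the function name `metagenome_embedding` is hypothetical):

```python
def metagenome_embedding(read_embeddings):
    """Sum the read embeddings of one metagenome into a single vector of dimension E."""
    dimension = len(read_embeddings[0])
    total = [0.0] * dimension
    for vector in read_embeddings:
        for i, value in enumerate(vector):
            total[i] += value
    return total


# Three reads embedded in E = 3 dimensions (toy values).
reads = [
    [0.5, -1.0, 2.0],
    [1.5, 0.0, -1.0],
    [-1.0, 1.0, 1.0],
]
print(metagenome_embedding(reads))  # → [1.0, 0.0, 2.0]
```

The output has the same dimension E whatever the number of reads, which is what allows any standard ML algorithm to consume it.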

The vectorial, or tabular, representation is the one used by most ML algorithms. It is more abstract than the multiple-instance representation: all the information of an entire metagenome, with its millions of reads related to hundreds of different genomes, is summarized into a single vector in the latent embedding space.

In addition to the classification evaluation, we computed and evaluated a clustering based on the embedding representation. For this clustering, m1 metagenomes are selected and m2 < m1 others are split into 10 sub-parts. Each metagenome, or part of a metagenome, is represented by one vector. An agglomerative clustering is fitted on these embeddings to compute a cluster map and visualize the relationships between clusters (Figure 3.13). As expected, the results show that embeddings from portions of the same metagenome are closer to each other. They also indicate that metagenomes of the same class are more likely to be found in the same cluster.
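The idea behind this clustering check can be illustrated with a small agglomerative procedure. The sketch below assumes average linkage and the Euclidean distance, which are not specified in the text, and uses toy 2-dimensional vectors in place of real metagenome embeddings; the function names are hypothetical.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def agglomerative(points, n_clusters):
    """Average-linkage agglomerative clustering; returns clusters as lists of indices."""
    clusters = [[i] for i in range(len(points))]

    def linkage(a, b):
        # Mean pairwise distance between the members of two clusters.
        total = sum(euclidean(points[i], points[j]) for i in a for j in b)
        return total / (len(a) * len(b))

    while len(clusters) > n_clusters:
        # Find and merge the two closest clusters.
        _, i, j = min(
            (linkage(clusters[i], clusters[j]), i, j)
            for i in range(len(clusters))
            for j in range(i + 1, len(clusters))
        )
        clusters[i] = sorted(clusters[i] + clusters[j])
        del clusters[j]
    return sorted(clusters)


# Indices 0-2: sub-parts of one metagenome; indices 3-4: sub-parts of another (toy values).
vectors = [[0.0, 0.1], [0.1, 0.0], [0.05, 0.05], [5.0, 5.1], [5.1, 4.9]]
print(agglomerative(vectors, n_clusters=2))
```

If the embeddings are meaningful, sub-parts of the same metagenome should end up in the same cluster, as they do in this toy example.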

Table of contents :

Acknowledgment

Abstract

1 Introduction

1.1 Background and rationale

1.1.1 Context

1.1.2 Exploring microbial environments with metagenomics

1.1.3 Metagenomics in precision medicine

1.1.4 Overview of different sequencing technologies

1.1.5 Bioinformatics workflows to analyze metagenomic data

1.1.6 Classification models in metagenomics

1.2 Research problem and contributions

1.2.1 Objectives

1.2.2 Deep learning based approach and point of care

1.2.3 Building interpretable signatures based on Subgroup Discovery

1.2.4 Scientific mediation

2 Experimental methods and design

2.1 Survey of existing metagenomics datasets

2.2 Simulating metagenomic datasets

2.2.1 Datasets used to train embeddings and taxa classifier

2.2.2 Datasets to learn the disease prediction tasks

2.3 Introduction of the IDMPS database

2.4 Code implementation

2.4.1 State-of-the-art classifiers

2.4.2 End-to-end deep learning for disease classification from metagenomic data

2.4.3 Generate statistically credible subgroups for interpretable metagenomic signature

2.5 Conclusion

3 End-to-end deep learning for disease classification from metagenomic data

3.1 The representation of metagenomic data

3.2 State of the art

3.2.1 Machine learning models from nucleotide one hot encoding

3.2.2 Machine learning models from DNA embeddings

3.2.3 Learning from multiple-instance representation of reads

3.3 Metagenome2Vec: a novel approach to learn metagenomes embeddings

3.3.1 kmer2vec: learning k-mers embeddings

3.3.2 read2vec: learning read embeddings

3.3.3 read2genome: reads classification

3.3.4 metagenome2vec: learning metagenome embeddings

3.4 Experiments and Results

3.4.1 Reference Methods compared to metagenome2Vec

3.4.2 Results of the Disease prediction tasks

3.5 Conclusion

4 Generate statistically credible subgroups for interpretable metagenomic signature

4.1 Introduction

4.1.1 Subgroup analysis in clinical research

4.1.2 Subgroup discovery: two cultures

4.1.3 Limits of current SD algorithms for clinical research

4.2 Q-Finder’s pipeline to increase credible findings generation

4.2.1 Basic definitions: patterns, predictive and prognostic rules

4.2.2 Preprocessing and Candidate Subgroups generation in Q-Finder

4.2.3 Empirical credibility of subgroups

4.2.4 Q-Finder subgroups diversity and top-k selection

4.2.5 Possible addition of clinical expertise

4.2.6 Subgroups’ generalization credibility

4.2.7 Experiments and Results

4.3 Applications to metagenomics for phenotype status prediction

4.3.1 Overview and concepts of the Q-Classifier

4.3.2 Statistical metrics and optimal union

4.3.3 Rejection and delegation concepts to adapt SD for prediction

4.3.4 Benchmark on real-world and simulated metagenomic data

4.4 Conclusion

5 Conclusion and perspectives

5.1 Summary of contributions

5.2 Methodological assessment

5.3 Perspectives for future works

Bibliography

Figures list

A Appendix

A.1 Multiple instance learning

A.1.1 Beam search strategy using decision tree versus exhaustive algorithm

A.2 Comparison of Q-Classifier with or without cascaded combination of state-of-the-art classifiers