Unsupervised Deep Self-Organising Maps

In the unsupervised setting, feature selection uses no label information, and the algorithm performs only the first step, a forward pass. In this forward pass, we construct a deep structure layer by layer, where each layer consists of the cluster representatives from the previous level. A natural question is whether such unsupervised feature selection can benefit a prediction task. Although it is currently impossible to provide a theoretical foundation for it, there is an intuition for why deep unsupervised feature selection is expected to perform, and does perform, better in practice. Real data are always noisy, and a “good” clustering or dimensionality reduction can significantly reduce the noise. If features are grouped into clusters of “high quality”, it is easier to detect a signal in the data, and the generalization performance of the classifier is higher. The hierarchical feature selection acts here as a filter, and a filter with multiple layers seems to perform better than a one-layer filter.
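The layer-wise forward pass can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure used in the thesis: the tiny 1-D SOM, the rule that picks the feature closest to each prototype as the cluster representative, the layer sizes, and all function names (`train_som`, `som_layer`, `deep_som_forward`) are hypothetical choices, and the data are synthetic.

```python
import numpy as np


def train_som(items, n_units, n_iter=200, seed=0):
    """Train a tiny 1-D SOM; `items` has one row per object to cluster."""
    rng = np.random.default_rng(seed)
    # Initialise the prototypes from randomly chosen items.
    protos = items[rng.choice(len(items), size=n_units, replace=False)].astype(float)
    grid = np.arange(n_units)
    for t in range(n_iter):
        frac = t / n_iter
        lr = 0.5 * (1.0 - frac)                          # decaying learning rate
        sigma = max(n_units / 2.0 * (1.0 - frac), 0.5)   # shrinking neighbourhood
        x = items[rng.integers(len(items))]
        bmu = np.argmin(np.linalg.norm(protos - x, axis=1))  # best-matching unit
        h = np.exp(-((grid - bmu) ** 2) / (2.0 * sigma ** 2))
        protos += lr * h[:, None] * (x - protos)
    return protos


def som_layer(X, feature_idx, n_units, seed=0):
    """Cluster the given feature columns and keep one representative per cluster."""
    items = X[:, feature_idx].T                          # one row per feature
    protos = train_som(items, n_units, seed=seed)
    dists = np.linalg.norm(items[:, None, :] - protos[None, :, :], axis=2)
    assign = dists.argmin(axis=1)                        # SOM unit of every feature
    reps = []
    for u in np.unique(assign):
        members = np.flatnonzero(assign == u)
        best = members[dists[members, u].argmin()]       # feature closest to the prototype
        reps.append(feature_idx[best])
    return np.array(sorted(reps))


def deep_som_forward(X, layer_sizes, seed=0):
    """Forward pass: each layer keeps the representatives of the previous one."""
    selected = np.arange(X.shape[1])
    layers = [selected]
    for depth, n_units in enumerate(layer_sizes):
        n_units = min(n_units, len(selected))
        selected = som_layer(X, selected, n_units, seed=seed + depth)
        layers.append(selected)
    return layers


rng = np.random.default_rng(0)
X = rng.normal(size=(60, 40))                            # 60 samples, 40 synthetic features
layers = deep_som_forward(X, layer_sizes=[16, 8, 4])
print([len(layer) for layer in layers])
```

Each entry of `layers` holds the feature indices that survive at that depth, so the last entry is the filtered feature set that a downstream classifier would use.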

Supervised Deep Self-Organising Maps

The supervised deep SOM feature selection is based mostly on the forward-backward idea. Forward greedy feature selection algorithms greedily pick, at every step, a feature that significantly reduces a cost function. The idea is to progress aggressively at each iteration and to obtain a sparse model. The major problem of this heuristic is that once a feature has been added, it cannot be removed, i.e. the forward pass cannot correct mistakes made in earlier iterations. A solution to this problem is a backward pass, which trains a full (not sparse) model and greedily removes the features with the smallest impact on the cost function. The backward algorithm on its own is computationally quite expensive, since it starts with a full model [43]. We propose a hierarchical feature selection scheme with SOM, which is outlined in Algorithm 2. The features in the backward step are drawn randomly.
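The generic forward-backward idea can be illustrated with a short sketch. It is not Algorithm 2 and does not involve SOM: the least-squares cost, the thresholds `min_gain` and `min_gain / 2`, the deterministic (rather than random) choice of the backward candidate, and the function names are all assumptions made for the example.

```python
import numpy as np


def cost(X, y, features):
    """Mean squared error of a least-squares fit restricted to `features`."""
    if not features:
        return float(np.mean((y - y.mean()) ** 2))
    A = np.column_stack([X[:, features], np.ones(len(y))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return float(np.mean((y - A @ coef) ** 2))


def forward_backward(X, y, min_gain=1e-3, max_features=10):
    selected = []
    current = cost(X, y, selected)
    while len(selected) < max_features:
        # Forward step: add the feature that lowers the cost the most.
        candidates = [j for j in range(X.shape[1]) if j not in selected]
        if not candidates:
            break
        trial = {j: cost(X, y, selected + [j]) for j in candidates}
        best = min(trial, key=trial.get)
        if current - trial[best] < min_gain:
            break                                  # no candidate helps enough
        selected.append(best)
        current = trial[best]
        # Backward step: undo one earlier choice if dropping it is almost free.
        if len(selected) > 2:
            drop = {j: cost(X, y, [f for f in selected if f != j])
                    for j in selected[:-1]}
            weakest = min(drop, key=drop.get)
            if drop[weakest] - current < min_gain / 2:
                selected.remove(weakest)
                current = drop[weakest]
    return selected, current


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 12))
# Synthetic target that depends only on features 3 and 7.
y = 2.0 * X[:, 3] - 1.5 * X[:, 7] + 0.1 * rng.normal(size=200)
selected, final_cost = forward_backward(X, y)
print(sorted(selected), final_cost)
```

Because a feature is added only when it gains at least `min_gain` and removed only when it costs less than half of that, every iteration lowers the cost and the loop terminates.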

Signatures of Metabolic Health

The biomedical problem we consider is a real-world binary classification of obese patients. The aim is to stratify patients in order to choose an efficient and appropriate personalized medical treatment. The task is motivated by a recent French study [46] of gene-environment interactions carried out to understand the development of obesity. It was reported that gut microbial gene richness can influence the outcome of a dietary intervention. A quantitative metagenomic analysis stratified patients into two groups: a group with a low gut flora gene count (LGC) and a group with a high gut flora gene count (HGC). The LGC individuals have higher insulin resistance and low-grade inflammation, and gene richness is therefore strongly associated with obesity-driven diseases. Individuals from the low gene count group seemed to have a higher obesity-related cardiometabolic risk than patients from the high gene count group. It was shown [46] that a particular diet is able to increase gene richness: an increase in genes was observed in the LGC patients after a 6-week energy-restricted diet. [19] conducted a similar study with Dutch individuals and reached a similar conclusion: there is hope that a diet can be used to induce a permanent change of gut flora, and that treatment should be phenotype-specific. There is therefore a need to go deeper into these biomedical results and to identify candidate biomarkers associated with cardiometabolic disease (CMD) risk factors and with different stages of CMD evolution.

Dimensionality reduction algorithms

In this study, we consider a number of dimensionality reduction approaches for feature visualization, including both supervised and unsupervised learning algorithms. As stated in [81] and [88], manifold learning methods are a robust framework for reducing the dimension. The idea behind these algorithms is to find a more compact space that is embedded in a higher-dimensional space. Ingwer et al. presented Multidimensional Scaling (MDS), which creates a mapping from a high-dimensional space to a more compact space and tries to preserve the distances between pairs of points, while Locally Linear Embedding (LLE) and Isometric Mapping (Isomap) were considered a new generation of dimensionality reduction algorithms and were applied to synthetic and real data with great success. We recall some of the prominent dimensionality reduction methods below.

Linear Discriminant Analysis (LDA) [172, 171] is a supervised dimensionality reduction method that fits a Gaussian density to each class. The algorithm projects the input onto the most discriminative directions to reduce the dimension. As described in [173], classical LDA maps the data into a lower-dimensional vector space that maximizes the ratio of the between-class distance to the within-class distance, which ensures maximum discrimination. Let $A \in \mathbb{R}^{N \times n}$ be a data matrix, where $n$ is the number of samples (columns) and $N$ is the number of features or dimensions (rows). Given the desired dimension $l$ ($l < N$), a transformation $G \in \mathbb{R}^{N \times l}$, with $G : a_i \in \mathbb{R}^N \to b_i = G^T a_i \in \mathbb{R}^l$, maps each column $a_i$ of $A$ ($1 \le i \le n$) in the $N$-dimensional space to a vector $b_i$ in the lower, $l$-dimensional space. Suppose that the samples in $A$ are grouped into $k$ classes, $A = \{\Pi_1, \Pi_2, \dots, \Pi_k\}$, where the $i$th class $\Pi_i$ consists of $n_i$ samples (with $\sum_{i=1}^{k} n_i = n$). Two scatter matrices evaluate the quality of the clusters: the within-class matrix

$$S_w = \sum_{i=1}^{k} \sum_{x \in \Pi_i} (x - m_i)(x - m_i)^T$$

and the between-class matrix

$$S_b = \sum_{i=1}^{k} n_i (m_i - m)(m_i - m)^T,$$

where $m_i = \frac{1}{n_i} \sum_{x \in \Pi_i} x$ is the mean of the $i$th class and $m = \frac{1}{n} \sum_{i=1}^{k} \sum_{x \in \Pi_i} x$ is the global mean. While $\mathrm{trace}(S_w)$ measures the closeness of the vectors within the classes, $\mathrm{trace}(S_b)$ measures the separation between classes. Under the linear transformation $G$, the between-class and within-class matrices become $S_b^L = G^T S_b G$ and $S_w^L = G^T S_w G$ in the low-dimensional space, respectively. To find the optimal $G$, we need to maximize $\mathrm{trace}(S_b^L)$ and minimize $\mathrm{trace}(S_w^L)$, which leads to (Eq. III.1).
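The scatter matrices can be checked numerically with a short NumPy sketch. It assumes the standard definitions of the within-class and between-class scatter, stores one sample per column as in the text, and uses synthetic data; the function name `scatter_matrices` and the random matrix `G` are illustrative only (`G` is an arbitrary projection, not the optimal one).

```python
import numpy as np


def scatter_matrices(A, labels):
    """Within- and between-class scatter; A is (N, n) with one column per sample."""
    N = A.shape[0]
    m = A.mean(axis=1, keepdims=True)              # global mean, shape (N, 1)
    S_w = np.zeros((N, N))
    S_b = np.zeros((N, N))
    for c in np.unique(labels):
        A_c = A[:, labels == c]                    # samples of class c
        m_c = A_c.mean(axis=1, keepdims=True)      # class mean
        S_w += (A_c - m_c) @ (A_c - m_c).T
        S_b += A_c.shape[1] * (m_c - m) @ (m_c - m).T
    return S_w, S_b


rng = np.random.default_rng(0)
labels = np.repeat([0, 1, 2], 20)                  # k = 3 classes, n = 60 samples
A = rng.normal(size=(5, 60)) + 2.0 * labels        # N = 5 features, class-shifted means
S_w, S_b = scatter_matrices(A, labels)

# The total scatter decomposes as S_t = S_w + S_b.
centered = A - A.mean(axis=1, keepdims=True)
S_t = centered @ centered.T

# A linear map G turns each scatter matrix S into G^T S G in the low-dimensional space.
G = rng.normal(size=(5, 2))                        # arbitrary G, not the optimal one
S_w_low, S_b_low = scatter_matrices(G.T @ A, labels)

print(np.trace(S_w), np.trace(S_b))
```

Computing the scatter matrices directly on the projected data `G.T @ A` gives the same result as `G.T @ S_w @ G` and `G.T @ S_b @ G`, which is the identity the text relies on.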

Abundance Bins for metagenomic synthetic images

In order to discretize abundances, assign a color to each of them, and construct synthetic images, we use different methods of binning (or discretization). On the artificial images, each bin is shown in a distinct color taken from the color strip of a heat-map colormap available in Python libraries, such as jet, viridis, etc. In [38], the authors stated that viridis showed good performance in terms of time and error. The binning method we used in the project is unsupervised binning, which does not use the target (class) information. Here we use EQual Width binning (EQW) over the range [Min, Max]. We test with k = 10 bins (for both distinct-color and gray images); the width of each interval is w = (Max − Min)/k, for example w = 0.1 if Min = 0 and Max = 1.

[Figure caption fragment: Right: log-histogram (base 4). (B): log histogram of each dataset.]
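Equal-width binning with k = 10 bins on [0, 1] can be sketched as below. The function name `equal_width_bins`, the clipping of out-of-range values, and the gray-level mapping are assumptions made for illustration; the colormap lookup used for the color images is not reproduced here.

```python
import numpy as np


def equal_width_bins(values, k=10, vmin=0.0, vmax=1.0):
    """Return the bin index (0 .. k-1) of each value for k equal-width bins."""
    values = np.clip(np.asarray(values, dtype=float), vmin, vmax)
    width = (vmax - vmin) / k                      # w = 0.1 for [0, 1] and k = 10
    idx = np.floor((values - vmin) / width).astype(int)
    return np.minimum(idx, k - 1)                  # vmax itself falls in the last bin


abundances = np.array([0.0, 0.05, 0.25, 0.999, 1.0])
bins = equal_width_bins(abundances)

# One gray level per bin, from 0.0 (first bin) to 1.0 (last bin).
gray = bins / (10 - 1)
print(bins, gray)
```

Values that sit exactly on an interior bin edge can land on either side because of floating-point rounding, so a real pipeline would need an explicit convention for them.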

Binning based on Quantile Transformation (QTF)

We test another approach to binning, based on a scaling that is learned on the training set and then applied to the test set. Because features can follow very different distributions, standardization is a commonly used preprocessing step for many ML algorithms. Quantile TransFormation (QTF), a non-linear transformation, is considered a strong preprocessing technique because it reduces the effect of outliers. Values in new or unseen data (for example, a test or validation set) that fall below or above the fitted range are set to the bounds of the output distribution. In the experiments, we use this transformation to map each feature's signal to a uniform distribution.
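The behaviour of a quantile transformation to a uniform distribution can be sketched in plain NumPy. This is an illustration of the idea via the empirical CDF, not the scikit-learn implementation; the function names `fit_quantiles` and `to_uniform`, the number of quantiles, and the log-normal test data are assumptions.

```python
import numpy as np


def fit_quantiles(train_col, n_quantiles=100):
    """Learn the empirical quantiles of one feature on the training set."""
    probs = np.linspace(0.0, 1.0, n_quantiles)
    return probs, np.quantile(train_col, probs)


def to_uniform(col, probs, quantiles):
    """Map values to [0, 1] through the learned empirical CDF.

    np.interp clamps inputs outside the fitted range to the end values,
    so unseen values below or above the training range become 0 or 1.
    """
    return np.interp(col, quantiles, probs)


rng = np.random.default_rng(0)
train = rng.lognormal(size=500)                    # skewed synthetic abundances
probs, quantiles = fit_quantiles(train)

u_train = to_uniform(train, probs, quantiles)
unseen = np.array([train.min() - 1.0, train.max() + 1.0])
u_test = to_uniform(unseen, probs, quantiles)      # clamped to the output bounds
print(u_train.mean(), u_test)
```

The quantiles are learned on the training column only and reused for the unseen values, which mirrors the fit-on-train, apply-to-test procedure described above.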

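We also consider a linear scaler, MinMaxScaler (MMS), to compare the scaling algorithms. This algorithm scales each feature to a given range with formulas (III.3) and (III.4):

$$X_{std} = \frac{X - X_{\min}}{X_{\max} - X_{\min}} \qquad \text{(III.3)}$$

$$X_{scaled} = X_{std} \cdot (\max - \min) + \min \qquad \text{(III.4)}$$

where $X_{\min}$ and $X_{\max}$ are the minimum and maximum of the feature, and $[\min, \max]$ is the target range.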
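Formulas (III.3) and (III.4) translate directly into a few lines of NumPy. The sketch below assumes that the feature minimum and maximum are learned on the training set and then reused on new data; the function names `fit_min_max` and `min_max_scale` and the toy numbers are illustrative.

```python
import numpy as np


def fit_min_max(X_train):
    """Per-feature minimum and maximum learned on the training set."""
    return X_train.min(axis=0), X_train.max(axis=0)


def min_max_scale(X, data_min, data_max, feature_range=(0.0, 1.0)):
    lo, hi = feature_range
    X_std = (X - data_min) / (data_max - data_min)   # formula (III.3)
    return X_std * (hi - lo) + lo                    # formula (III.4)


X_train = np.array([[1.0, 10.0], [3.0, 30.0], [5.0, 50.0]])
X_test = np.array([[7.0, 0.0]])                      # outside the training range

data_min, data_max = fit_min_max(X_train)
train_scaled = min_max_scale(X_train, data_min, data_max)
test_scaled = min_max_scale(X_test, data_min, data_max)
print(train_scaled, test_scaled)
```

Because the mapping is linear, values outside the training range land outside the target range instead of being clamped, and a constant feature would cause a division by zero that this sketch does not handle.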

The QTF and MMS implementations are provided by the scikit-learn library [16] in Python. As shown in Table III.3, QTF outperforms MMS in most cases. Compared with EQW (Table III.2), MMS and EQW show a similar pattern in the results, but MMS outperforms EQW for the FC model.

Table of contents :

Acknowledgements

Abstract

Résumé

I Introduction

I.1 Motivation

I.2 Brief Overview of Results

I.2.1 Chapter II: Heterogeneous Biomedical Signatures Extraction based on Self-Organising Maps

I.2.2 Chapter III: Visualization approaches for metagenomics

I.2.3 Chapter IV: Deep learning for metagenomics using embeddings

II Feature Selection for heterogeneous data

II.1 Introduction

II.2 Related work

II.3 Deep linear support vector machines

II.4 Self-Organising Maps for feature selection

II.4.1 Unsupervised Deep Self-Organising Maps

II.4.2 Supervised Deep Self-Organising Maps

II.5 Experiment

II.5.1 Signatures of Metabolic Health

II.5.2 Dataset description

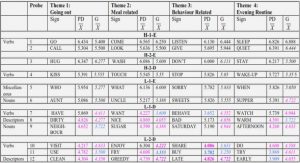

II.5.3 Comparison with State-of-the-art Methods

II.6 Closing and remarks

III Visualization Approaches for metagenomics

III.1 Introduction

III.2 Dimensionality reduction algorithms

III.3 Metagenomic data benchmarks

III.4 Met2Img approach

III.4.1 Abundance Bins for metagenomic synthetic images

III.4.1.1 Binning based on abundance distribution

III.4.1.2 Binning based on Quantile Transformation (QTF)

III.4.1.3 Binary Bins

III.4.2 Generation of artificial metagenomic images: Fill-up and Manifold learning algorithms

III.4.2.1 Fill-up

III.4.2.2 Visualization based on dimensionality reduction algorithms

III.4.3 Colormaps for images

III.5 Closing remarks

IV Deep Learning for Metagenomics

IV.1 Introduction

IV.2 Related work

IV.2.1 Machine learning for Metagenomics

IV.2.2 Convolutional Neural Networks

IV.2.2.1 AlexNet, ImageNet Classification with Deep Convolutional Neural Networks

IV.2.2.2 ZFNet, Visualizing and Understanding Convolutional Networks

IV.2.2.3 Inception Architecture

IV.2.2.4 GoogLeNet, Going Deeper with Convolutions

IV.2.2.5 VGGNet, very deep convolutional networks for large-scale image recognition

IV.2.2.6 ResNet, Deep Residual Learning for Image Recognition

IV.3 Metagenomic data benchmarks

IV.4 CNN architectures and models used in the experiments

IV.4.1 Convolutional Neural Networks

IV.4.2 One-dimensional case

IV.4.3 Two-dimensional case

IV.4.4 Experimental Setup

IV.5 Results

IV.5.1 Comparing to the-state-of-the-art (MetAML)

IV.5.1.1 Execution time

IV.5.1.2 The results on 1D data

IV.5.1.3 The results on 2D data

IV.5.1.4 The explanations from LIME and Grad-CAM

IV.5.2 Comparing to shallow learning algorithms

IV.5.3 Applying Met2Img on Sokol’s lab data

IV.5.4 Applying Met2Img on selbal’s datasets

IV.5.5 The results with gene-families abundance

IV.5.5.1 Applying dimensionality reduction algorithms

IV.5.5.2 Comparing to standard machine learning methods

IV.6 Closing remarks

V Conclusion and Perspectives

V.1 Conclusion

V.2 Future Research Directions

Appendices

A The contributions of the thesis

B Taxonomies used in the example illustrated by Figure III.7

C Some other results on datasets in group A

List of Figures

List of Tables

Bibliography