Get Complete Project Material File(s) Now! »

Honeypot Classifications

Data control and data capture are essential paradigms for a safe honeypot operation [154]. Honeypot operators should know at any time what is happening to the honeypot and the monitoring system should have multiple layers in order to mitigate the failure of a layer [154]. McCarty [101] reported a case where an attacker switched off the honeypot’s monitoring features. This is a worst-case scenario for honeypot operators. If attackers were able to abuse the honeypot for performing further attacks, honeypot operators would be legally responsible for their systems and would actively participate in attacks. Therefore, it is essential to be able to classify honey-pots. When a honeypot operator encounters a new honeypot implementation and if the honeypot category is known, then a decision on whether to operate the honeypot or not is easier to take. Seifert et al. [147] classi-fied honeypots according to six classes: interaction level, data capture, containment, distribution appearance, communication interface, and role in a multi-tier architecture.

Interaction-level Honeypots usually expose some functionality to an attacker. In order to limit the attacker’s control, the exposed functionality is limited in some manner. From the point of view of interactions, hon-eypots are divided into low- and high-interaction honeypots [130], [147], [154]. The term mid-interaction honeypot is also sometimes used [130] to specify honeypots emulating services having more features than low-interaction honeypots. Multipot is a medium interaction honeypot designed for Windows platforms capable to emulate known vulnerabilities. Having received the shell code1 Multipot [77] tries to emulate it with the purpose of downloading the additional payload. The interaction between attackers with a compromised system is often modeled with attack-trees [145] where a node corresponds to a stage of an attack and the edges represent the logical connections between nodes.

Data capture Spitzner [154] and Cheswick et al. [27] advise the use of multiple monitoring and data-capture layers. Seifert et al. [147] propose the use of a honeypot’s data capture capabilities, which describe the type of data it is able to capture, as honeypot classificat ion criteria. They define four values for this category: events, attacks, intrusion, and none. When a honeypot collects data such as scanning activities, it collects events; when it collects data on a brute-force attack on an account, it collects attacks and when it collects data about an attacker who has penetrated the system, it collects information about intrusions.

Containment In most countries, honeypot operators are legally responsible for their systems [2]. Best practice shows that a firewall should be put in front of the honeypot in o rder to protect other organizations and to prevent it being actively involved in attacks on third parties [154]. Nicomette et al. [112] configured a firewall in front a honeypot such that attackers could not rea ch third parties through the honeypot. Such firewall configurations with additional intrusion detectio n systems are called honeywalls [25]. Having penetrated a system, attackers often want to download tools from the Internet [112], [154]. If the firewall blocks all connections from the honeypot to the Internet, the honeypot is unattractive to attackers and attacks can only be partially observed [112], [154]. Spitzner [154] proposes limiting the number of Internet connections from the honeypot. This allows attackers to download their tools but not to perform destructive attacks [154]. Alata et al. [2] propose simulating external hosts for the honeypot by using dynamic connection redirection mechanisms. Seifert et al. [147] defined these mitigation techniques as containment. They identified four containment techniques. Firstly, a ho neypot can block actions of attackers. Secondly, a honeypot could defuse attacker actions. In this case, the attacker can connect to the target, but the content of the connection is tampered with so as to remove dangerous payloads. Thirdly, a honeypot can also slowdown an attacker. An example is to artificially slow down connections related to attacks. Finally, a honeypot can block all actions by attackers.

Distribution appearance Seifert et al. [147] introduced the distribution appearance class as a classification criterion. Honeypots can be stand-alone or distributed systems. A stand-alone honeypot only interacts with the attacker and his environment, while a distributed honeypot interacts both with the attacker and with additional entities. Examples are automated log file an alysis or attacker tracking programs.

Communication interface Seifert et al. [147] also uses the communication interface to classify honeypots. The communication interface defines the means by which the in formation about attackers can be col-lected. For instance, network traffic can be collected or system logs can be recovered, via an Application Programming Interface (API).

Role in multi-tier architecture Seifert et al. [147] describe server-side honeypots. Such a honeypot passively waits for connections from attackers and waits to be exploited. He also identified client honeypots, which try to mimic a user whose machine gets compromised when he or she visits a malicious web server. This thesis mainly focuses on server-side honeypots.

The voluntary interaction with attackers is the riskiest part of honeypot operation [192]. When a new hon-eypot design is published, it is usually classified accordin g its interaction level. Low-interaction honeypots have often a lower software complexity than high-interaction honeypots and hence are easier to manage. Hon-eypots are divided into research and production honeypots: production honeypots are systems for detecting the presence of attackers, whereas research honeypots are used to study attacks [154].

High-Interaction Honeypots

Cheswick [26] mentions throwaway machines having real security holes with the sole purpose of being attacked a long time before the honeypot movement. These services can be compromised by attackers and their behavior can be studied. He also discusses jails created with the chroot command. This command permits a different system root to be set for a process. The new root may be a subdirectory of the overall file system and a program running in this jail can only access the files in this subdirec tory and its descendants. He concludes that such a jail is not perfectly secure and not entirely invisible. He advised using throwaway machines with real security holes that are externally monitored by a second machine. He proposes capturing all the network traffic and thus observes an attacker’s activity.

This was a valid solution for its time, because attackers connected to the service using clear-text protocols for remote access. Hence, their activities could be monitored. However by the mid-nineties, encryption was gaining popularity. Most telnet services [126] were replaced with SSH [196] and a significant fraction of communications became encrypted. In 2000, Spitzner [154] called these throwaway machines high-interaction honeypots. In order to overcome the problem of monitoring encrypted network traffic, data capture should be performed on the honeypot itself. Spitzner [154] proposes the use of a modified command line shell to monitor an attacker’s activity [154]. He also defended the idea that no information about attackers should be locally stored on a honeypot: if information is locally stored, an attacker could tamper with or delete the recorded information. Attackers often installed their own command line interpreters on honeypots [9].

This prevents an attacker from being monitored by the honeypot’s shell. To counteract this trend, the monitoring features moved into kernel space. Balas et al. [9] implemented Sebek a Linux kernel module for monitoring an attacker’s keystrokes and related file accesses. Sebek uses the rootkit technology initially developed by attackers who wished to hide their presence on compromised machines. It detours the read system call, and so is able to capture the content of opened files and the standard input stream. Hence, even encrypted communications can be captured because the monitoring happens after the decryption. Moreover, Sebek transmits the acquired data over a network to a server that is unlikely to be under the control of an attacker. A little bit later McCarty [101] published an article in which he describes techniques to detect these addi-tional monitoring features. Attackers inspect the addresses of the system calls.

When a function is detoured its address appears in an unexpected address range. McCarty [101] also describes techniques that attackers could use to switch off the monitoring features. Dunlap et al. [45] argue that the kernel monitoring approaches depend on the integrity of the operating system and assume that the operating system is trustworthy. As proved by McCarty, this is not always the case. Hence, Dunlap et al. [45] suggest performing the monitoring at virtual machine level. This approach implies that the honeypot is operated in a virtual machine. A virtual machine is a program that emulates a complete operating system and is run under another operating system. A popular open-source virtual machine is a Linux or Windows operating system that uses the emulator Qemu [18]. Por-tokalidis et al. [124] extended Qemu with additional monitoring features in order to detect zero-day exploits. These features make it suitable for the operation of honeypots. The authors developed a generic method to de-tect stack smashing, heap corruption and format string attacks.

The key idea is to detect them at the CPU level. Qemu is a virtual machine allowing each executed instruction to be monitored and controlled. Something that is hardly possible with a kernel level approach. The honeypot of Portokalidis et al. [124] generates signatures when an attack is encountered. A honeypot operator can see the exploit when it happens but is still not able to track all the regular commands that an attacker has entered. Hence Xuxian et al. [194] extended Qemu such that the executed programs be recovered. With such an approach, a honeypot operator is able to determine the actions of an attacker following a successful exploit. Qemu translates each instruction in user space resulting a high performance overhead comparing to the hardware-assisted virtual machines.

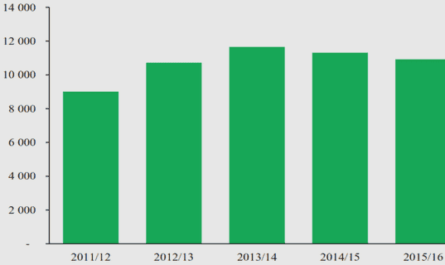

As previously discussed, with low-interaction honeypots attackers can only perform a limited set of inter-actions with the honeypot. This limitation helps the operator to control the honeypot. Consequently, this model scales better than the model of high-interaction honeypots. Hence, low-interaction honeypots are better suited for assessing attacks and for collecting a significant amoun t of data [112], [167]. However, low-interaction hon-eypots are not suitable if the intend is to study attacker’s behavior within a system due to the limited amount of exposed features. While had Cheswick [26] discussed throwaway machines with real security holes in the early 90s and Bellovin had implemented pseudo services it was not until 2000 that evolve quickly. Figure 3.3 shows the evolution of the numbers of scientific publicat ions about low- and high-interaction honeypots. A detailed description about the experiment is presented in appendix B. Publications in 2000 and 2001 dealt only with high-interaction honeypots, describing the experiments made by the authors did with high-interaction honeypots, explaining what the attacker did.

The deception toolkit had been available since 1998. In 2003 first implementations of Honeyd appeared. Gupta [113] discussed the effectiveness of both tools. He pinpointed their limits and proposed an iterative approach to the operation of honeypots because the attackers’ tactics and strategies are unknown. In 2006 virtual machines gained popularity for the operation of honeypots. The advan-tage of virtual machines is that they can be set up easily and can be reset to a clean state without reinstalling a machine from scratch. This process can even be partially automated.

Honeypot Research Activities

This powerful idea of honeypots, leads to numerous research activities. The major trends of research activities are grouped in this section.

Attacker Observation and Information Gathering

The basic idea of honeypots is to provide a counterfeit infrastructure in a dangerous environment. This in-frastructure is closely monitored. When, the infrastructure is attacked, intruders’ behavior is studied and in-formation is gathered from the attackers. Low-interaction honeypots only provide a limited set of features for attackers, but they are quite useful for detecting attacks in networks. A connection to Honeyd discloses the source IP address of an attacker. The requested service is also revealed. An attacker can also connect to a high-interaction honeypot. Portokalidis et. al. [124] proposed Argos a customized virtual machine to detect zero day exploits. The high-interaction honeypot community is also interested in post-exploit activities. Most research efforts have focused on improving the monitoring capabilities of high-interaction honeypots. Alata et al. [3] modified the TTY driver installed on the honeypot whic h enabled them to mirror the attacker’s terminal and gather key-strokes dynamics. Moreover, they track system calls to the kernel for the case attackers bypass a terminal.

Attackers often switch of the terminal’s echo and blindly type commands. Xuxian et al. record only processes that are executed [194]. Alata et al. [3] introduced an additional system call that is used within their exposed program to differentiate system calls related to attackers and the system itself. Xuxian et al. [194] simply trap all the system calls. Zuge et al. [199] use high-interaction honeypots to collect self-propagating malicious software. The authors compared the amount of data they collected with their high-interaction honey-pot with the programs they collected from the low-interaction honeypot Nepenthes running the two experiments the same time. They collected more unique malware samples with their high-interaction honeypot because Ne-penthes only emulates a subset of vulnerabilities.

The authors used the TCP stream reassembly engine snort from the open source intrusion detection system and looked for Portable Executable (PE) files in the network streams tapped from the honeypot. This approach is used to collect malicious software targeted at Microsoft Windows operating systems. In addition, they monitored the file system of the virtual machine that is operating the honeypot. If a new malicious program is installed, it is automatically copied. The file system is periodi-cally remotely imaged and all the files are traversed. Each fil e is compared with a list of initial files installed on the honeypot. If a new file appears, a new malicious program has been detected. The advantage of this approach compared to those used by Nepenthes, is that samples that use unknown malicious programs can be collected. Hence, low-interaction and high-interaction honeypots enable to collect programs related to attack-ers. However, the current honeypots do not reveal any information about the usefulness of these programs from the attacker’s points of view. For instance, a tool to unpack commonly used archives has a lower value to an attacker, than a database program including stolen information.

Honeypot Management

The operation of a high-interaction honeypot often involves costs. The major challenge is to handle arbitrary code execution by attackers. Honeypots may crash and need to be restarted, or additional firewall rules must be configured. The reason for this partially retroactive met hod is that the attackers’ actions and objectives are often unknown. The operation of a honeypot is an iterative process [113]. Initially assumptions are made about attackers’ behaviors. After a while, attackers violate these assumptions. The honeypot operator has to adjust the honeypot according to new assumptions.

Further important considerations are the scalability and network visibility. A honeypot that is operated on only one public IP address has a lower visibility than a collection of honeypots. Honeypot operation is subject to legal restrictions. If the honeypot gets involved in further attacks that involve third parties, the honeypot operator is legally responsible [2]. Hence, Spitzner [154] and Balas et al. [10] propose letting attackers in, but using intrusion detection systems and customized firewalls to prevent them from attacking third parties. Chamales [25] calls these firewalls honeywalls. Portokalidis et al. [125] and Jianwei et al. [199], claim that honeywalls are perfectly suited to high-interaction honeypots that aim only to collect the first program that is acquired by attackers. In ca se an attack exploits the services and pushes the shellcode, the honeypot operator has already achieved his goal. However, if the objective is to study attacker behaviors after the break-in, providing a honeywall is not really effective. Attackers usually try to connect to the Internet after they have compromised a system [2], [3], [181].

When they fail, they usually give up and leave the honeypot. Alata et al. [2] proposed a customized Linux firewa ll that can dynamically redirects connections or simulate or drop them. They give an example in which an attacker scanned an entire network. In this case, the customized kernel forged connections to make an attacker believe that Internet connectivity was available. In the scanning example, only SYN/ACK [156] or RST TCP packets are forged. However, if an attacker connects to a pseudo-service, a content has to be returned. In essence, this is the same problem faced by low-interaction honeypots.

The risk of not attacking third parties can be reduced by using a number of different strategies. Xuxian et al. [193] proposed a hybrid honeypot solution. The low-interaction honeypot Honeyd is installed at different locations on different IP addresses forwarding the traffic to a centralized cluster of high-interaction honeypots. This centralized approach helps to handle failures. On the client side, code complexity is reduced, resulting in a low failure rate. However, the operational risk of the centralized high-interaction honeypot remains, and a trust relationship has to be established among the entities operating the low-interaction honeypots and the central-ized high-interaction honeypots. Vrable et al. [173] address the scalability issues of high-interaction honeypots. They claim that most high-interaction honeypots waste CPU cycles during the long periods when they are wait-ing for attackers. The authors exploit these cycles using a distributed architecture of high-interaction honeypots to emulate on-demand a large fleet of high-interaction honey pots while using minimal physical resources. Most of the proposed concepts to mitigate the damage resulting from honeypots are static solutions based on fixed assumptions. However, honeypots themselves do not address self-management aspects such that they assess the benefits and losses during operation.

Distributed Honeypot Operation

Setting up of a honeypot on a public IPv4 address permits an observation of only 2132 of the address space4 . A Linux kernel can be configured to have more than one IP addres s per network interface [127]. However, it is recommended that no more than 16 addresses should be used in order to avoid instability [94]. If a larger address space is to be monitored, more physical interfaces are needed. This limitation can be overcome by using Honeyd. In this case the kernel TCP/IP stack is not used, but is emulated in user space. Honeyd enables entire subnetworks to be emulated. However, no organization can emulate all free IP addresses. Firstly, an organization can use only IP addresses that they own. Several organizations such as [141] manage the address allocation. Secondly, the networks need to be properly routed in order to guarantee the stability of the Internet. In this case, only owned networks can be monitored, and no meaningful assumptions about the neighboring networks can be made.

Hence, Dacier [38] described the setup of a distributed network of honeypots composed of a set of low-interaction honeypots. He created a project, Leurr´.com to which people could contribute by setting up a low-interaction honeypot. Information is centrally logged and each partner can access the common collected data. In 2008, the project comprised 50 different platforms in 30 countries. Initially (Leurr´.com V.1.0), Honeyd was deployed aiming to reduce operational risk. The EURECOM institute offered a Compact Disk with the necessary software to facilitate deployment. They also offered a centralized log collection facility and granted access to partners. In Leurr´ V.1.0., The authors focused mainly on the measurement of scanning activities, but subsequently the authors focused on collecting malicious programs with their low-interaction honeypots.

The Leurr´.com project evolved into an EU project called WOMBAT [39]. The authors used SGNET instead of honeyd. The authors collected malicious programs using feedback from dynamic malware analysis. Any pointers to other malicious programs found by the analysis were acquired. The authors of this project have three objectives: Data acquisition, data enrichment and threat analysis. The NoHa, a framework 6 EU project [88] is a similar European project to set up honeypots. In the intrusion detection community, the SurfIDS [160] is a distributed intrusion detection system project capable of identifying malicious traffic by means of shared information retrieved from other sensors. The purpose of these projects is to collaboratively share threat information and collect malicious data on a large scale, aiming to provide a better visibility and understanding of global malicious activities. An exhaustive list of distributed malicious data collection projects can be found in [99]. The advantage of distributed honeypots is that a higher visibility of malicious activities is reached than with a single honeypot. The higher visibility frequently results in a larger amount of data that is collected with such solutions. Hence, data aggregation techniques and an evaluation of data processing tools is mandatory for a meaningful interpretation of the data.

Multi-Agent Learning Founded on Game Theory

Markov decision processes (MDP) are frequently used to model an agent in a dynamic environment. An agent must learn a policy that maps states to actions by optimizing its reward signal. Often some parameters of the MDP, especially the probabilistic transition function, are unknown and a learning approach must be used. In some cases, the successive states should also be taken into account by an agent instead of just maximizing a reward signal. However, the previously discussed reinforcement learning techniques only consider an agent in an environment make the assumption that the environment is stationary. When there are more than two agents, a simplistic approach is to consider opponents as the environment [90]. However, an environment containing additional agents is constantly changing [74]. The environment may not be stationary anymore being is influenced by the other agents rather than generated by a stochastic process [74]. In this section we consider reinforcement learning where more than one agent is present, and the agents are opponents. The learning approaches should be feasible in close to real time. Stochastic games are introduced that handle the uncertainty of agents dealing with other agents better than traditional Markov decision processes.

Table of contents :

Introduction

2.1 Context

2.2 Problem Statement

2.3 Contributions

I State of the art

3 Honeypots

3.1 Honeypot Evolution

3.2 Honeypot Classifications

3.3 Honeypot Research Activities

3.3.1 Attacker Observation and Information Gathering

3.3.2 Honeypot Management

3.3.3 Distributed Honeypot Operation

3.3.4 Honeypot Data Analysis

3.4 Detecting Honeypots

3.5 Summary

3.6 Limitations

4 Learning in Games

4.1 Game Theory

4.2 Reinforcement Learning

4.2.1 Markov Decision Process

4.2.2 Learning Agents

4.3 Multi-Agent Learning Founded on Game Theory

4.4 Summary

II Contributions

5 Modeling Adaptive Honeypots

5.1 Modeling Attacker Behavior

5.1.1 Hierarchical Probabilistic Automaton

5.1.2 Attacker Responses

5.2 Honeypot Behaviors

5.3 Summary

6 Learning in Honeypot Games

6.1 Game Theory and High-Interaction Honeypots

6.1.1 Defining Payoffs

6.1.2 Computing Payoffs with Simulations

6.1.3 Leveraging Optimal Strategy Profiles

6.2 Learning Honeypots Operated by Reinforcement Learning

6.2.1 Environment

6.2.2 Honeypot Actions

6.2.3 Rewards

6.2.4 Learning Agents

6.3 Fast Concurrent Learning Honeypot

6.3.1 Attacker and Honeypot Rewards

6.3.2 Learning Honeypot and Attackers

6.4 Summary

6.5 Limitations

7 Honeypot Operation

7.1 Netflow Analysis

7.2 Network Activity Identification

7.3 Full Network Capture Analysis

7.3.1 Network Forensic Tool Analysis

7.4 User Mode Linux Tests

7.5 In vivo Malware Analysis

7.5.1 Tree- and Graph-based kernels

7.5.2 The Process Tree Model

7.5.3 The Process Graph Model

7.6 Implementation of Adaptive Honeypots

7.6.1 Adaptive Honeypot – Framework

7.6.2 Component Description

7.7 Conclusions

7.8 Limitations

8 Experimental Evaluations

8.1 Recovering High-Interaction Honeypot Traces

8.2 Recovering Low-Interaction Honeypot Traces

8.3 Computing Nash Equilibria

8.4 Reinforcement Learning Driven Honeypots

8.5 Honeypot Comparison

8.6 Fast Concurrent Learning

8.7 Conclusions

9 Conclusions and Perspectives

9.1 Summary of the thesis

9.2 Insights

9.3 Limitations

9.3.1 System Attacks

9.3.2 Behavioral attacks

9.4 Future Work

9.4.1 Alternative Honeypot Designs and Feature Extensions

9.4.2 Additional Honeypot – Attacker System Games

A Vulnerability Measurements

B Quantitative Publication Analysis

B.1 Trend Analysis

B.2 Publication Measurements

C Honeypot Operation

C.1 Forensic Tool Exploits

D Experimental Evaluations

D.1 Modification of the Linux Authentication Modules

D.2 Kernel Modifications

D.3 Message Exchange