Combination of different imaging modalities

Multimodality has been put forward in reviews of AD classification (RATHORE et al., 2017; FALAHATI, WESTMAN and SIMMONS, 2014; ARBABSHIRANI et al., 2017). As different imaging modalities reflect different stages of the AD process, combining them could give a more complete picture of each individual. However, we did not find the impact of multimodality to be significant. This result is not surprising: the most frequently combined modalities are MRI and FDG PET (19 out of 35 experiments using multimodality), and we showed that including other features does not lead to a significant increase in performance compared to using FDG PET alone. In addition, the cost of collecting images of several modalities for each patient is not negligible and should be taken into account when using such approaches.

Design of the decision support system and methodological issues

No test data set is used in 7.3% of experiments. In 16% of articles using feature selection, the selection is performed on the whole data set, and 8% of articles do not describe this step well enough to draw conclusions. Other forms of data leakage (use of the test set for decision making) are identified in 8% of experiments, and the situation is unclear for 4%.

Overall, 26.5% of articles use the test set in the training process, to train the algorithm, choose the features or tune the parameters. This issue, and in particular performing feature selection on the whole data set, has also been pointed out by ARBABSHIRANI et al. (2017) in the context of brain disorder prediction.
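
The effect of performing feature selection on the whole data set can be illustrated with a minimal sketch on pure noise. Everything here is an assumption made for illustration: the synthetic data, the mean-difference ranking, the nearest-centroid classifier and the function names are hypothetical and are not taken from the reviewed articles.

```python
import numpy as np

def select_top_k(X, y, k):
    """Rank features by absolute difference of class means; return the top-k indices."""
    diff = np.abs(X[y == 1].mean(axis=0) - X[y == 0].mean(axis=0))
    return np.argsort(diff)[-k:]

def nearest_centroid_accuracy(X_train, y_train, X_test, y_test):
    """Fit class centroids on the training set only, then score on the test set."""
    c0 = X_train[y_train == 0].mean(axis=0)
    c1 = X_train[y_train == 1].mean(axis=0)
    d0 = np.linalg.norm(X_test - c0, axis=1)
    d1 = np.linalg.norm(X_test - c1, axis=1)
    pred = (d1 < d0).astype(int)
    return float((pred == y_test).mean())

def cross_validate(X, y, k_features, n_folds=5, leaky=False, seed=0):
    """K-fold accuracy; if leaky, features are selected once on ALL subjects."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    global_cols = select_top_k(X, y, k_features) if leaky else None
    scores = []
    for i in range(n_folds):
        test = folds[i]
        train = np.concatenate([folds[j] for j in range(n_folds) if j != i])
        # Correct protocol: the selection only sees the training subjects.
        cols = global_cols if leaky else select_top_k(X[train], y[train], k_features)
        scores.append(nearest_centroid_accuracy(
            X[train][:, cols], y[train], X[test][:, cols], y[test]))
    return float(np.mean(scores))

# Synthetic data: 60 subjects, 2000 noise features, labels unrelated to the features.
rng = np.random.default_rng(42)
X = rng.normal(size=(60, 2000))
y = np.array([0, 1] * 30)

leaky_acc = cross_validate(X, y, k_features=20, leaky=True)
honest_acc = cross_validate(X, y, k_features=20, leaky=False)
print(f"selection on whole data set: {leaky_acc:.2f}")
print(f"selection inside each fold:  {honest_acc:.2f}")
```

Since the labels carry no information, any accuracy clearly above chance in the leaky setting comes only from the test subjects having taken part in the selection.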

Performance as a function of data set size

We plot the AUC against the number of individuals for each experiment in Figure 1.3, with the colored dots representing experiments with identified issues. The colored dots show a higher prevalence of identified issues among high-performance studies: a methodological issue has been identified in 18.5% of experiments with an AUC below 75%, whereas this proportion rises to 36.4% for experiments with an AUC of 75% or higher (significant difference, p = 0.006). We can observe an upper limit (shown as a dashed line) that decreases as the number of individuals increases, suggesting that high performance achieved with a small number of subjects might be due to overfitting. This phenomenon has already been identified by ARBABSHIRANI et al. (2017). A lower limit is also visible, with the AUC increasing with the number of individuals. This may reflect the fact that, on average, methods generalize better when correctly trained on larger data sets. But it might also suggest that it is harder to publish a method with relatively low performance if it has been trained on a large number of subjects, such a paper then being considered as reporting a negative result. Within papers as well, authors tend to focus on their best-performing method, and rarely explain what they learned on the way to it. As the number of subjects increases, the two lines seem to converge to an AUC of about 75%, which might represent the true performance of current state-of-the-art methods.

Figure 1.3 seems to highlight possible unconscious biases in the publication of scientific results in this field. It might be considered more acceptable to publish a high-performance method with a small sample size than a low-performance method with a large sample size. First, we think that low-performance methods trained on large samples should also be published, as it is important for the field to understand what works and also what does not. In particular, we think that we, as authors, should not focus only on our best-performing method, but should also report other attempts. Second, it might not be a problem that innovative methodological works that do not result in higher performance are also published, provided that the prediction performance is not used to argue for the interest and validity of the method. The machine learning field is fortunate to have simple metrics, such as AUC or accuracy, to compare different methods on an objective basis. However, we believe that such metrics should be used wisely, so as not to discourage the publication of innovative methodological works even if they do not immediately yield better prediction performance, and not to overshadow the need to better understand why some methods work better than others.

Use of features of test subjects

Feature embedding is performed on the whole data set in 6.8% of experiments, meaning that the features of the test individuals are used for feature embedding during the training phase. Since, unlike feature selection, feature embedding often does not use the diagnosis of the test individuals, performing it on test individuals can be considered a less serious issue than for feature selection. It does, however, require re-training the algorithm each time a prediction has to be made for a new individual, which is not suited to use in clinical practice.
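
As a minimal sketch of the alternative, an embedding can be fitted on the training individuals only and then applied unchanged to any new individual. PCA is used here purely as a stand-in embedding, and the class name and synthetic data are hypothetical; the reviewed articles use a variety of embedding methods.

```python
import numpy as np

class TrainOnlyPCA:
    """PCA whose mean and components are estimated from training subjects only."""

    def fit(self, X_train, n_components):
        self.mean_ = X_train.mean(axis=0)
        # Rows of vt are the principal directions of the centered training data.
        _, _, vt = np.linalg.svd(X_train - self.mean_, full_matrices=False)
        self.components_ = vt[:n_components]
        return self

    def transform(self, X):
        # Projects any subject (training or new) without refitting anything.
        return (X - self.mean_) @ self.components_.T

rng = np.random.default_rng(0)
X_train = rng.normal(size=(40, 10))   # synthetic training subjects
x_new = rng.normal(size=(1, 10))      # a subject seen only at prediction time

embedding = TrainOnlyPCA().fit(X_train, n_components=3)
components_before = embedding.components_.copy()
z_new = embedding.transform(x_new)    # no re-training needed for the new subject
```

With this design the fitted parameters are frozen after training, so a prediction for a new patient does not change the model used for previous patients.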

Use of the diagnosis date

In 5.6% of the experiments, the date of AD diagnosis is used to select the input visit of pMCI individuals, for both training and testing. As explained in section 1.2.3, this practice can prevent the method from generalizing to clinical practice, as the progression date of test individuals is by definition unknown.

This type of experiment answers the question: “can one detect, in the data of an MCI patient 3 years before diagnosis, some characteristics that are rarely present in stable MCI subjects?” This should not be confused with: “can such characteristics predict that an MCI patient will progress to AD within the next 3 years?” What is missing to draw conclusions about predictive ability is to consider the MCI subjects who have the characteristics found and count the proportion of them who will not develop AD within 3 years.
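
The difference between the two questions can be made concrete with a small worked example. The counts below are entirely hypothetical and only serve to show that a characteristic can be frequent in progressive subjects and rare in stable ones while still leaving many false alarms among the subjects who carry it.

```python
def sensitivity(true_pos, false_neg):
    """Share of progressive MCI subjects in whom the characteristic is found."""
    return true_pos / (true_pos + false_neg)

def positive_predictive_value(true_pos, false_pos):
    """Share of subjects with the characteristic who actually progress."""
    return true_pos / (true_pos + false_pos)

# Hypothetical cohort: 100 progressive and 400 stable MCI subjects.
# The characteristic is found in 80 progressive subjects and in 40 stable ones
# (10% of stable subjects, i.e. "rarely present").
true_pos, false_neg, false_pos = 80, 20, 40

sens = sensitivity(true_pos, false_neg)
ppv = positive_predictive_value(true_pos, false_pos)
false_alarm_share = 1.0 - ppv  # carriers who do NOT progress within the horizon

print(f"sensitivity: {sens:.2f}, PPV: {ppv:.2f}, non-progressors among carriers: {false_alarm_share:.2f}")
```

In this made-up cohort the characteristic is detected in most progressive subjects, yet one carrier in three does not progress, which is the quantity the first question leaves unexamined.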

This confusion arose notably after the publication of DING et al. (2018). The paper attracted considerable attention from the general media, including a post on Fox News (WOOLLER, 2018) stating “Artificial intelligence can predict Alzheimer’s 6 years earlier than medics”. However, the authors state in the paper that “final clinical diagnosis after all follow-up examinations was used as the ground truth label”, thus without any control of the follow-up periods, which vary across subjects. Therefore, a patient may be counted as a true negative in this study, namely as a truly stable MCI subject, even though this subject may have been followed for less than 6 years. There is no guarantee that this subject is not in fact a false negative for the prediction of diagnosis at 6 years.

Temporal regression and stacking

In a second approach, we fit a linear regression over time to predict the next time point of each cognitive score. One linear regression is trained per subject, so the next time point is predicted from all the previous time points of that subject, which allows a different number of past visits for each subject. This prediction of the cognitive scores is then combined with the prediction made in the cross-sectional framework by stacking them into one feature vector, which is then used to predict the corresponding diagnosis. This approach is referred to as the stacking approach, and is described in Figure 2.1 B. (b).
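
The per-subject regression and the stacking step can be sketched as follows. This is an illustrative sketch only: the function name, the score names, the visit times and all numerical values are hypothetical, and `np.polyfit` is one possible way to fit a straight line over time.

```python
import numpy as np

def predict_next_score(visit_times, scores, next_time):
    """Fit score = slope * time + intercept on one subject's past visits,
    then extrapolate to next_time."""
    slope, intercept = np.polyfit(visit_times, scores, deg=1)
    return float(slope * next_time + intercept)

# Hypothetical subject with three past visits (times in years) and two cognitive scores.
times = [0.0, 0.5, 1.0]
past_scores = {"score_1": [10.0, 11.0, 12.0], "score_2": [28.0, 27.0, 26.0]}
temporal_pred = np.array(
    [predict_next_score(times, values, next_time=2.0) for values in past_scores.values()]
)

# Hypothetical predictions of the same two scores from the cross-sectional framework.
cross_sectional_pred = np.array([13.5, 24.5])

# Stacking: both sets of predicted scores form one feature vector for the classifier.
stacked = np.concatenate([cross_sectional_pred, temporal_pred])

# A subject with only two past visits is handled by the same function.
two_visit_pred = predict_next_score([0.0, 1.0], [20.0, 23.0], next_time=2.0)
```

Because one line is fitted per subject, the number of past visits can differ between subjects without any change to the code.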

Rate of change approach

In a third approach, we compute the rate of change of all the input features between the last two visits. The next time point is then predicted from the input features and their rate of change, using a ridge regression. The diagnosis prediction is performed as described in the cross-sectional framework. This approach, referred to as the rate of change approach, is described in Figure 2.1 B. (c).
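
A minimal sketch of how such an input vector could be built is given below. The function name and the example values are hypothetical, and dividing the difference by the time elapsed between the two visits is an assumption about how the rate is normalized.

```python
import numpy as np

def rate_of_change_features(visit_times, features):
    """Concatenate the features of the last visit with their rate of change
    between the last two visits.

    visit_times: sequence of n_visits time stamps (e.g. in years), increasing.
    features: array-like of shape (n_visits, n_features).
    """
    visit_times = np.asarray(visit_times, dtype=float)
    features = np.asarray(features, dtype=float)
    if len(visit_times) < 2:
        raise ValueError("at least two visits are needed to compute a rate of change")
    elapsed = visit_times[-1] - visit_times[-2]
    if elapsed <= 0:
        raise ValueError("visit times must be strictly increasing")
    rate = (features[-1] - features[-2]) / elapsed
    return np.concatenate([features[-1], rate])

# Hypothetical subject: three visits, two features per visit.
x = rate_of_change_features(
    visit_times=[0.0, 1.0, 1.5],
    features=[[30.0, 5.0], [28.0, 7.0], [27.0, 8.0]],
)
```

The resulting vector would then serve as the input of the ridge regression that predicts the next time point.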

Validation procedure

Performance measures are obtained by splitting the cohort 50 times into a training (70%) and a test (30%) set. The parameters of the ridge regression and SVM are tuned within a nested 5-fold cross-validation. As this procedure provides 50 performance measures for each method, the mean performance and its standard deviation are computed. Two-tailed t-tests are used to compare method performance. As a baseline for comparison with our method, we predict the diagnosis at time t + Δt by applying a linear SVM directly to the features at time t.
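
The overall structure of this procedure can be sketched as below for the ridge regression part. This is a simplified illustration under stated assumptions: the data are synthetic, the closed-form ridge without intercept, the mean squared error metric, the candidate `alphas` and all function names are hypothetical choices, and the SVM and the t-tests are left out.

```python
import numpy as np

def ridge_fit(X, y, alpha):
    """Closed-form ridge regression (no intercept): (X'X + alpha I)^-1 X'y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ y)

def mse(X, y, weights):
    return float(np.mean((X @ weights - y) ** 2))

def tune_alpha(X, y, alphas, n_folds, rng):
    """Inner cross-validation on the training set: keep the alpha with the
    lowest mean validation error."""
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    best_alpha, best_err = None, np.inf
    for alpha in alphas:
        errs = []
        for i in range(n_folds):
            val = folds[i]
            tr = np.concatenate([folds[j] for j in range(n_folds) if j != i])
            errs.append(mse(X[val], y[val], ridge_fit(X[tr], y[tr], alpha)))
        if np.mean(errs) < best_err:
            best_alpha, best_err = alpha, float(np.mean(errs))
    return best_alpha

def repeated_holdout(X, y, alphas, n_repeats=50, train_frac=0.7, seed=0):
    """Repeat a random train/test split; tune on the training part only."""
    rng = np.random.default_rng(seed)
    n_train = int(round(train_frac * len(y)))
    test_errors = []
    for _ in range(n_repeats):
        perm = rng.permutation(len(y))
        train, test = perm[:n_train], perm[n_train:]
        alpha = tune_alpha(X[train], y[train], alphas, n_folds=5, rng=rng)
        weights = ridge_fit(X[train], y[train], alpha)
        test_errors.append(mse(X[test], y[test], weights))  # test set used once
    return np.array(test_errors)

# Synthetic regression problem standing in for the real cohort.
rng = np.random.default_rng(1)
X = rng.normal(size=(100, 5))
y = X @ np.array([1.0, -2.0, 0.5, 0.0, 3.0]) + rng.normal(scale=0.1, size=100)

errors = repeated_holdout(X, y, alphas=[0.01, 0.1, 1.0, 10.0])
mean_err, std_err = errors.mean(), errors.std()
```

The point of the nesting is that the test subjects of each split play no role in the choice of the regularization parameter.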

TADPOLE challenge

The TADPOLE challenge consists of predicting future clinical status, ADAS-Cog score and ventricle volume for rollover individuals in the ADNI study. Participants were asked to make monthly predictions from January 2018 to December 2022. The previously described framework was designed to make predictions 1 year after the last visit, and is easily extended to make predictions at an interval Δt. Several such models are trained in order to predict the cognitive scores of each subject at time points 6 months apart. Monthly predictions of the cognitive scores are then obtained by linear interpolation, and the monthly values are used as input for the classification. This extension makes it possible to obtain monthly predictions for each subject. The prediction of the ventricle volume was performed using the same method as for cognitive score prediction. The prediction of the diagnosis was evaluated using the multiclass area under the receiver operating characteristic curve (mAUC), defined in HAND and TILL (2001).
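
The interpolation step can be sketched as follows, using `np.interp` for piecewise-linear interpolation. The function name, the horizons and the predicted values are hypothetical and only illustrate the mechanics.

```python
import numpy as np

def monthly_predictions(horizons_months, predicted_scores):
    """Linearly interpolate predictions made at sparse horizons (in months
    after the last visit) to obtain one value per month."""
    months = np.arange(horizons_months[0], horizons_months[-1] + 1)
    return months, np.interp(months, horizons_months, predicted_scores)

# Hypothetical predictions of one cognitive score at horizons 6 months apart.
horizons = [6, 12, 18]
scores_at_horizons = [20.0, 23.0, 29.0]

months, monthly_scores = monthly_predictions(horizons, scores_at_horizons)
score_by_month = dict(zip(months.tolist(), monthly_scores.tolist()))
```

Each monthly value can then be passed to the classifier in the same way as a directly predicted score.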

Table of contents :

1 Predicting the Progression of Mild Cognitive Impairment Using Machine Learning: A Systematic and Quantitative Review

1.1 Introduction

1.2 Materials and Method

1.2.1 Selection process

1.2.2 Reading process

1.2.3 Quality check

1.2.4 Statistical analysis

1.3 Descriptive analysis

1.3.1 A recent trend

1.3.2 Features

1.3.3 Algorithm

1.3.4 Validation method

1.4 Performance analyses

1.4.1 Features

1.4.2 Cognition

1.4.3 Medical imaging and biomarkers

1.4.4 Combination of different imaging modalities

1.4.5 Longitudinal data

1.4.6 Algorithms

1.5 Design of the decision support system and methodological issues

1.5.1 Identified issues

1.5.1.1 Lack or misuse of test data

1.5.1.2 Performance as a function of data set size

1.5.1.3 Use of features of test subjects

1.5.1.4 Use of the diagnosis date

1.5.1.5 Choice of time-to-prediction

1.5.1.6 Problem formulation and data set choice

1.5.2 Proposed guidelines

1.6 Conclusion

2 Prediction of future cognitive scores and dementia onset in Mild Cognitive Impairment patients

2.1 Introduction

2.2 Materials and methods

2.2.1 Cross-sectional framework

2.2.1.1 Description

2.2.1.2 Inclusion of additional features

2.2.2 Longitudinal frameworks

2.2.2.1 Averaging approach

2.2.2.2 Temporal regression and stacking

2.2.2.3 Rate of change approach

2.2.3 Experimental setup

2.2.3.1 Data set

2.2.3.2 Validation procedure

2.2.4 TADPOLE challenge

2.3 Results

2.3.1 Cross-sectional framework

2.3.1.1 Proposed approach

2.3.1.2 Additional features

2.3.1.3 Building regression groups

2.3.2 Longitudinal frameworks

2.3.2.1 Averaging approach

2.3.2.2 Stacking approach

2.3.2.3 Rate of change approach

2.3.3 Prediction at different temporal horizons

2.3.4 TADPOLE challenge

2.4 Discussion

2.4.1 Cross-sectional experiments

2.4.2 Longitudinal frameworks

2.4.3 Interpretability

2.4.4 TADPOLE challenge

2.5 Conclusion

3 Reduction of Recruitment Costs in Preclinical AD Trials: Validation of Automatic Pre-Screening Algorithm for Brain Amyloidosis

3.1 Abstract

3.2 Introduction

3.2.1 Background

3.2.2 Related works

3.2.3 Contributions

3.3 Materials and Methods

3.3.1 Cohorts

3.3.2 Input Features

3.3.3 Algorithms

3.3.4 Performance Measures

3.4 Results

3.4.1 Algorithm and feature choice

3.4.1.1 Algorithm choice

3.4.1.2 Feature selection for cognitive variables

3.4.1.3 Use of MRI

3.4.2 Use of longitudinal measurements

3.4.3 Proposed method performance

3.4.3.1 Cost reduction

3.4.3.2 Age difference between groups

3.4.3.3 Training on a cohort and testing on a different one

3.4.3.4 Representativity of the selected population

3.4.4 Building larger cohorts

3.4.4.1 Pooling data sets

3.4.4.2 Effect of sample size

3.5 Discussion

3.5.1 Results of the experiments

3.5.1.1 Algorithm and feature choice

3.5.1.2 Method performance

3.5.1.3 Data set size

3.5.2 Comparison with existing methods

3.5.2.1 Univariate approaches

3.5.2.2 Other multivariate approaches

3.6 Conclusion

4 Use of psychotropic drugs throughout the course of Alzheimer’s disease: a large-scale study of French medical records

4.1 Introduction

4.2 Materials and methods

4.2.1 Cohort description

4.2.1.1 Description

4.2.1.2 Group definition

4.2.1.3 Patient overview

4.2.2 Studied treatments

4.2.3 Descriptive and predictive analysis of treatment history

4.2.3.1 Statistical analysis

4.2.4 Predictive model

4.3 Results

4.4 Discussion

4.4.1 Risk factors

4.4.2 Prediction

4.4.3 Management practices

4.4.4 Strengths and weaknesses of the study

4.5 Conclusion

Conclusion & Perspectives

A Supplementary materials for the systematic and quantitative review

A.1 Query

A.2 Selection process diagram

A.3 Reported items

A.4 Journals and conference proceedings

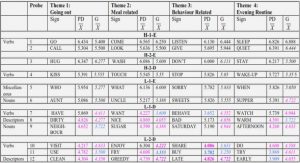

A.5 Information table

B Supplementary materials for amyloidosis prediction

B.1 Computing R and S from the PPV and NPR

B.2 Difference of age in the 3 cohorts

B.3 Algorithm pseudo-code

C Supplementary materials for the study of treatment prescriptions

C.1 Statistical analysis

C.1.1 Model description

C.1.2 Coefficient interpretation

C.1.3 Intercept of the non-AD group

C.1.4 Slope of the non-AD group

C.1.5 Intercept change for the AD group

C.1.6 Slope change for the AD group

C.1.7 Impact of diagnosis on the intercept

C.1.8 Impact of diagnosis on the slope

C.2 Predictive model

C.2.1 Performance measures

C.2.2 Results optimized for screening